A TM1 cookbook


This is a collaborative writing effort: users can collaborate on writing the articles of the book, arranging them in the right order, and reviewing or modifying articles previously written. So if you have information to share, or if you read an article and think it could have been written better, you can do something about it.

The articles are divided into the following sections defined by roles:

Users

Tips for TM1 users:

Cube Viewer tips

Columns width

When you have a view with a lot of dimensions displayed, most of the screen real estate is often consumed by the dimension columns and you find yourself scrolling left and right a lot to read the data points.

To ease that problem:

- choose the shortest aliases for all dimensions from the subset editor

- choose subset names as short as possible

- uncheck Options->Expand Row Header

Excel/Perspectives Tips

Synchronising Excel data

To reduce the probability of crashing Excel, disable Automatic Calculation: go to Tools->Options->Calculation tab, then click the Manual button.

You can use:

- F9 to manually refresh all the open workbooks

- Shift F9 to refresh only the current worksheet

- F2 and Enter key (i.e. edit cell) to refresh only 1 cell

In TM1 9.4.1, spreadsheets recalculate automatically when opening a workbook or changing a SUBNM, even though automatic calculation is disabled. To work around this, define the name TM1REBUILDOPTION in the workbook:

- From the Excel top menu, click Insert->Name->Define

- In the Define Name dialog box, input TM1REBUILDOPTION

- Set the value in the Refers to box to 0 and click OK

Avoid multiple dynamic slices

Multiple dynamic TM1 slices in several sheets in a workbook might render Excel unstable and crash. Some references might get messed up too.

E10) Data directory not found

If you are getting the e10) data directory not found popup error when loading Perspectives, you need to define the data directory of your local server, even if you do not want to run a local server.

Go to Files->Options and enter a valid folder in the Data Directory box. If that box is greyed out, you need to manually edit the variable in the tm1p.ini stored on your PC.

DataBaseDirectory= C:\some\path\

Alternatively you can modify the setting directly from Excel with the following VBA code:

Application.Run("OPTSET", "DatabaseDirectory", "C:\some\path")

Publish Views

Publishing users' views is still far from a quick and simple process in TM1.

First, the admin cannot see other users' views.

Second, users cannot publish their own views themselves.

So publishing views always required direct intervention from the admin. Well, not anymore :)

1. create a process with the following code

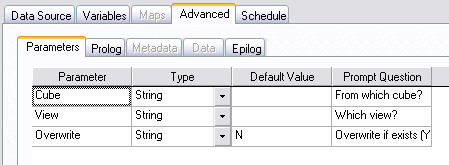

in Advanced->Parameters Tab

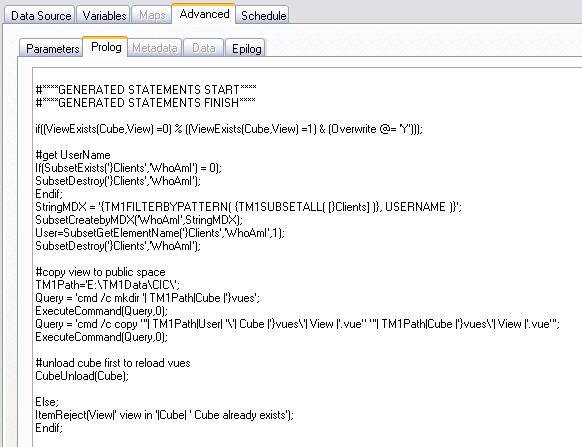

in the Advanced->Prolog Tab

if((ViewExists(Cube,View) =0) % ((ViewExists(Cube,View) =1) & (Overwrite @= 'Y')));

#get UserName

If(SubsetExists('}Clients','WhoAmI') <> 0);

SubsetDestroy('}Clients','WhoAmI');

Endif;

StringMDX = '{TM1FILTERBYPATTERN( {TM1SUBSETALL( [}Clients] )}, USERNAME )}';

SubsetCreatebyMDX('WhoAmI',StringMDX);

User=SubsetGetElementName('}Clients','WhoAmI',1);

SubsetDestroy('}Clients','WhoAmI');

#copy view to public space

TM1Path='E:\TM1Data\TM1Server\';

Query = 'cmd /c mkdir '| TM1Path|Cube |'}vues';

ExecuteCommand(Query,0);

Query = 'cmd /c copy "'| TM1Path|User| '\'| Cube |'}vues\'| View |'.vue" "'| TM1Path|Cube |'}vues\'| View |'.vue"';

ExecuteCommand(Query,0);

#unload cube first to reload vues

CubeUnload(Cube);

Else;

ItemReject(View|' view in '|Cube| ' Cube already exists');

Endif;

2. change the TM1Path and save

3. in Server Explorer, Process->Security Assignment, set that process as Read for all groups that should be allowed to publish

Now your users can publish their views on their own by executing this process, they just need to enter the name of the cube and the view to publish.

Alternatively, the code in the above Prolog Tab can be simplified and replaced with these 5 lines:

if((ViewExists(Cube,View) =0) % ((ViewExists(Cube,View) =1) & (Overwrite @= 'Y')));

PublishView(Cube,View,1,1);

Else;

ItemReject(View|' view in '|Cube| ' Cube already exists');

Endif;

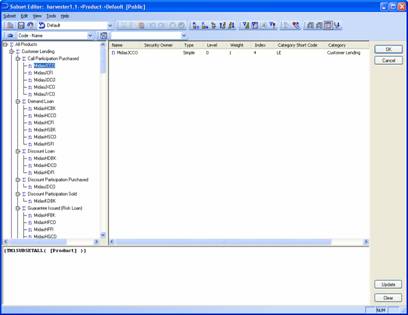

Subset Editor

Tree View

To display consolidated elements below their children: View -> Expand Above

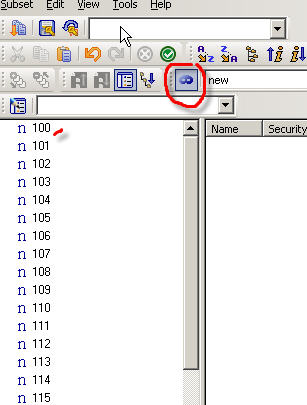

Faster Subset Editor

In order to get a faster response from the subset editor, disable the Properties Window:

View -> Properties Window

or click the "Display Properties Window" from the toolbar

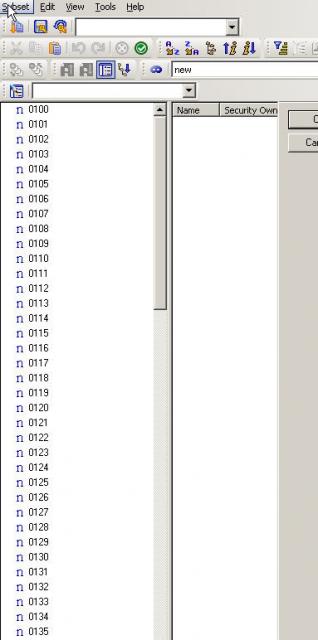

Updating an existing subset

To add one or more elements to an existing subset without recreating it, from the subset editor:

- Edit->Insert Subset

- Select the elements

- Click OK to Save as private Subset1

Subset1 is now added to your existing subset.

- Expand Subset1

- Click on the Subset1 consolidation element then delete

- You can now save your subset with the new elements

TM1Web

- To get cube views to display much faster in TM1Web: subsets of the dimensions at the top must contain only 1 element each

- Clicking on the icons in the Export dropdown menu will have no effect, only clicking on the associated text on the right "slice/snapshot/pdf" will start an export

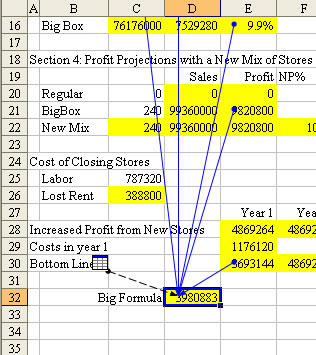

Tracing TM1 references

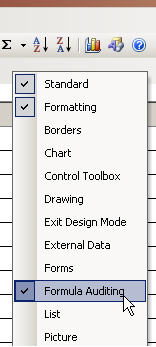

Homemade TM1 reports can get quite convoluted, and users might have a hard time updating them as it is difficult to tell what some TM1 formulas are pointing to.

The Excel Formula Auditing toolbar can be useful in such situations.

- right click next to the top bar to bring up the bars menu

- select "Formula Auditing" bar

- Activate tracing arrows to highlight precedents

This is quite useful to get the scope of a cube or dimension reference in a report or to see which elements a DBRW formula is made of.

Developers

Section dedicated to developers

A closer look at dynamic slices

Dynamic slices can be quite useful when the elements you display in your reports evolve over time: they automatically update with the new elements.

The following article digs into the parameters that define these slices and shows some of the possibilities to interact with them. The idea was originally submitted by Philip Bichard.

The dynamic slice parameters are stored in the names list of the worksheet.

To display these in Excel: Insert -> Name -> Paste -> Paste List

Most of the parameters are defined as SLxxCyy

xx is the slice reference 01, 02, 03... for as many slices as there are in the report

yy is the stacked dimension reference

ex: SL01C01 relates to the first stacked dimension on top of columns

SL01C02 relates to the 2nd stacked dimension from the top

SL02R01 relates to the first stacked dimension for rows on the most left of the 2nd slice
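The naming convention above can be decoded mechanically, which helps when auditing a long list of names pasted from a workbook. The following is a minimal Python sketch, assuming names follow the layout "SL" + two-digit slice + axis letter (C or R) + two-digit stack position + optional suffix; `decode_slice_name` and `SliceName` are hypothetical helpers, not part of TM1.

```python
import re
from typing import NamedTuple, Optional

# Assumed layout: SL<slice:2 digits><axis: C or R><position:2 digits><optional suffix>
_NAME = re.compile(r"^SL(\d{2})([CR])(\d{2})(\w*)$")


class SliceName(NamedTuple):
    slice_no: int   # 1 for SL01, 2 for SL02, ...
    axis: str       # "column" or "row"
    position: int   # position in the stack (1 = top-most / left-most)
    suffix: str     # e.g. "DIMNM", "ELEMS_01", or "" for the bare name


def decode_slice_name(name: str) -> Optional[SliceName]:
    """Split a stacked-dimension name into its parts, or return None if it
    does not follow the assumed SLxxCyy / SLxxRyy pattern."""
    m = _NAME.match(name)
    if m is None:
        return None
    axis = "column" if m.group(2) == "C" else "row"
    return SliceName(int(m.group(1)), axis, int(m.group(3)), m.group(4))


if __name__ == "__main__":
    for n in ("SL01C02", "SL02R01DIMNM", "SL01FILT"):
        print(n, "->", decode_slice_name(n))
```

Names that are not tied to a stacked dimension, such as SL01FILT or CUBE01RNG, deliberately return None in this sketch.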

CUBE01RNG location of the cell hosting the name of the cube referenced

SL01C01DIMNM subset used or dimension name if not using a saved subset

SL01C01ELEMS_01 list of elements to display

SL01C01EXPANDUP 1/ 0 trigger consolidations as collapsed or expanded

SL01C01FMTNM name of the elements format to use

SL01C01HND ???

SL01C01IDXS_01 ???

SL01C01RNG range for the stacked dimension

SL01CPRX_01 ???

SL01DATARNG range for DBRW cells

SL01FILT filter settings

SL01R01ALIAS name of the alias used

SL01R01DIMNM

SL01R01ELEMS_01 list of elements to be displayed

SL01R01ELEMS_xx ...

SL01R01EXPANDUP

SL01R01HND

SL01R01IDXS_01

SL01R01NM subset name

SL01R01RNG section boundary range

SL01RPRX_01

SL01TIDXS_01 ???

SL01TPRX_01 ???

SL01VIEWHND ???

SL01VIEWIDX ???

SL01ZEROSUPCOL zero suppress on columns trigger

SL01ZEROSUPROW zero suppress on rows trigger

SX01C01ENABLE trigger

SX01C01IDX

SX01C01WD

SX01R01ENABLE trigger

SX01R01HT

SX01R01IDX trigger

SX01RNG section boundary range

SXBNDDSP section boundary display trigger

TITLE1 subnm formula for 1st dimension

TITLE1NM subset

TITLE1RNG cell location

TITLE2

TITLE2NM

TITLE2RNG

TITLE3

TITLE3ALIAS alias to display for the dimension

TITLE3RNG

redefining the following name SL01FILT from

SL01FILT ="FUNCTION_PARAM=0.000000€SORT_ORDER=desc€TUPLE_STR=[Sales Measures].[Sales Units]"

to

SL01FILT ="FUNCTION_PARAM=0.000000€SORT_ORDER=asc€TUPLE_STR=[Sales Measures].[Sales Cost]"

would change the column on which the sorting is made from sales units to sales cost, and also the order from descending to ascending.
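The SL01FILT value is a list of key=value pairs, so it can be edited programmatically before being written back to the workbook. Here is a small Python sketch of that edit, assuming the pairs are separated by the € character exactly as shown above; `parse_filter` and `build_filter` are illustrative names only.

```python
SEPARATOR = "€"  # assumption: the separator stored in the workbook is the one shown above


def parse_filter(value: str) -> dict:
    """Turn 'KEY=val€KEY=val' into an ordered dict of settings."""
    return dict(part.split("=", 1) for part in value.split(SEPARATOR))


def build_filter(settings: dict) -> str:
    """Inverse of parse_filter: join the settings back into one string."""
    return SEPARATOR.join(f"{key}={val}" for key, val in settings.items())


if __name__ == "__main__":
    old = "FUNCTION_PARAM=0.000000€SORT_ORDER=desc€TUPLE_STR=[Sales Measures].[Sales Units]"
    settings = parse_filter(old)
    settings["SORT_ORDER"] = "asc"
    settings["TUPLE_STR"] = "[Sales Measures].[Sales Cost]"
    print(build_filter(settings))
```

Only the two changed keys differ in the result; everything else, including the key order, is carried over untouched.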

One could also achieve a similar result with an MDX expression.

The following code will change the subset from the dimension on the row stack to the predefined "Level Zero Dynamic" subset so all elements will then be displayed.

Sub ChangeRowSubset()

ActiveSheet.Names.Add Name:="SL01R01NM", RefersTo:="Level Zero Dynamic"

Application.Run "TM1REFRESH"

End Sub

Note, you need to use TM1REFRESH or Alt-F9 to get the slice to rebuild itself, TM1RECALC (F9) would only update the DBRW formulas.

Dynamic slices can break with the popup "No values available" when some element no longer exists or has no values for that specific slice.

An easy fix is to disconnect from the TM1 server, load the report, remove the element causing trouble from the slice and names table, then reconnect to the TM1 server, the dynamic slice will refresh on reconnect just fine.

Attributes

Edit Attributes...

You may get the following message: "This operation accesses a dimension containing a large number of elements. The uploading of these elements from the server may take a few minutes. Continue?"

In that case, editing the attributes cube directly is much faster:

- View -> Display Control Objects

- Open the cube }ElementAttributes_dimension

- Modify the required fields like in any cube

Add new Attribute

For large dimensions, it is faster to create a temporary TI process with the following code in the Prolog:

ATTRINSERT('Model', '', 'InteriorColor', 'S');

This example creates the InteriorColor string attribute for the Model dimension.

Checking if an attribute/alias already exists

Let's say we want to create an alias Code for the dimension Customer.

In Advanced->Prolog Tab:

If(DIMIX('}ElementAttributes_Customer','Code') = 0);

AttrInsert('Customer','','Code','A');

Endif;

So you do not have to worry about the AttrInsert generating an error if you ever have to run the process again.

After updating aliases in TM1, you will need to clear the Excel cache to see the changes by running the macro m_clear or restart excel.

Bulk reporting

The TM1->Print Report function from Perspectives is useful to generate static reports in bulk for a given set of elements.

The following code is mimicking and extending that functionality to achieve bulk reporting for a TM1 report in a more flexible fashion.

For example you could get a report based on the branches of a company to be saved in each respective branch documents folder instead of getting them all dumped in a single folder or you could also get each branch report emailed to its own branch manager.

Here is the Excel VBA code:

Option Explicit

Sub BulkReport()

'http://www.vbaexpress.com/kb/getarticle.php?kb_id=359

'+ admin@bihints mods

'+ some of Martin Ryan code

Dim NewName As String

Dim nm As Name

Dim ws As Worksheet

Dim TM1Element As String

Dim i As Integer

Dim myDim As String

Dim server As String

Dim fullDim As String

Dim total As Long

Dim folder As String

Dim destination As String

destination = "\\path\to\Your Branch Documents\"

server = "tm1server"

myDim = "Store"

fullDim = server & ":" & myDim

If Run("dimix", server & ":}Dimensions", myDim) = 0 Then

MsgBox "The dimension does not exist on this server"

Exit Sub

End If

'loop over all elements of the branch dimension

For i = 1 To Run("dimsiz", fullDim)

TM1Element = Run("dimnm", fullDim, i)

'see if there are any sales for that branch

total = Application.Run("DBRW", Range("$B$1").Value, "All Staff", Range("$B$7").Value, TM1Element, Range("$B$8").Value, "Total Sales")

'process only level 0 elements and sales <> 0 otherwise skip it

If ((Application.Run("ellev", fullDim, TM1Element) = 0) And (total <> 0)) Then

'update the dimension

Range("$B$9").Value = "=SUBNM(""" & fullDim & """, """", """ & TM1Element & """, ""Name"")"

'refresh worksheet

Application.Run ("TM1RECALC")

With Application

.ScreenUpdating = False

' Copy specific sheets

' *SET THE SHEET NAMES TO COPY BELOW*

' Array("Sheet Name", "Another sheet name", "And Another"))

' Sheet names go inside quotes, separated by commas

On Error GoTo ErrCatcher

'Sheets(Array("Sheet1", "CopyMe2")).Copy

Sheets(Array("Sheet1")).Copy

On Error GoTo 0

' Paste sheets as values

' Remove External Links, Hyperlinks and hard-code formulas

' Make sure A1 is selected on all sheets

For Each ws In ActiveWorkbook.Worksheets

ws.Cells.Copy

ws.[A1].PasteSpecial Paste:=xlValues

ws.Cells.Hyperlinks.Delete

Application.CutCopyMode = False

Cells(1, 1).Select

ws.Activate

Next ws

Cells(1, 1).Select

'Remove named ranges except print settings

For Each nm In ActiveWorkbook.Names

If nm.NameLocal <> "Sheet1!Print_Area" And nm.NameLocal <> "Sheet1!Print_Titles" Then

nm.Delete

End If

Next nm

'name report after the branch name

NewName = Left(Range("$B$9").Value, 4)

'Save it in the branch folder of the same name

folder = Dir(destination & NewName & "*", vbDirectory)

ActiveWorkbook.SaveCopyAs destination & folder & "\" & NewName & "_report.xls"

'skip save file confirmation

ActiveWorkbook.Saved = True

ActiveWorkbook.Close SaveChanges:=False

.ScreenUpdating = True

End With

End If

Next i

Exit Sub

ErrCatcher:

MsgBox "Specified sheets do not exist within this workbook"

End Sub

Commenting out portions of code in TI

You may want to comment out portions of code for legacy or future use instead of removing them. Prefixing every line with # is ugly and messes up the indenting. Here is a quick, neat and simple fix:

############# PORTION COMMENTED OUT

if(1 = 0);

#code to comment out is here

endif;

####################################

That's it. It can also come in handy to turn off auto-generated code in the #****GENERATED STATEMENTS START**** section.

/!\ I would strongly recommend you add clear markers around the code commented out otherwise it can be very easy for yourself or someone else to overlook the short "if" statement and waste time wondering why the process is not doing anything.

Thanks Paul Simon for the tip.

Creating temporary views

When creating views from the cube viewer, there is a hard limit on the size of the view displayed. It is 100MB (32-bit) or 500MB (64-bit) by default, and it can be changed with the MaximumViewSize parameter in tm1s.cfg.

But it is not practical to generate such large views manually.

An alternative is to do it from the Turbo Integrator:

- create a new TI process

- select "TM1 Cube view import"

- click browse

- select the cube

- click create view

from there you can create any view

however it can be more advantageous to create and delete views on-the-fly so your server is cleaner and users will be less confused.

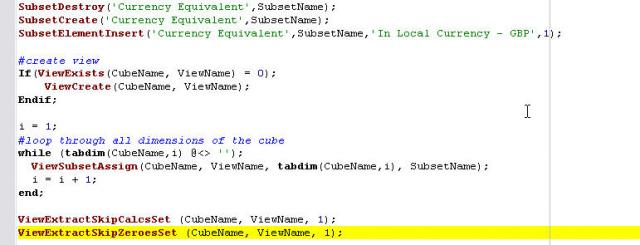

The following code generates a view from the cube 'MyCube' including all elements of the cube.

You need to add some SubsetCreate/SubsetElementInsert in order to limit the view

/!\ remove all consolidations, in most cases they interfere with the import and you will get only a partial import or nothing at all.

--------prolog

CubeName = 'MyCube';

ViewName = 'TIImport';

SubsetName = 'TIImport';

i = 1;

#loop through all dimensions of the cube

while (tabdim(CubeName,i) @<> '');

ThisDim = tabdim(CubeName,i);

If(SubSetExists(ThisDim, SubsetName) = 0);

StringMDX = '{TM1FILTERBYLEVEL( {TM1SUBSETALL( [' | ThisDim | '] )}, 0)}';

#create a subset keeping only level 0 elements (no consolidations)

SubsetCreatebyMDX(SubsetName,StringMDX);

EndIf;

i = i + 1;

end;

If(ViewExists(CubeName, ViewName) = 0);

ViewCreate(CubeName, ViewName);

Endif;

i = 1;

#loop through all dimensions of the cube

while (tabdim(CubeName,i) @<> '');

ViewSubsetAssign(CubeName, ViewName, tabdim(CubeName,i), SubsetName);

i = i + 1;

end;

ViewExtractSkipCalcsSet (CubeName, ViewName, 1);

ViewExtractSkipZeroesSet (CubeName, ViewName, 1);

--------------epilog

#cleanup view

ViewDestroy(CubeName, ViewName);

i = 1;

#loop through all dimensions of the cube

while (tabdim(CubeName,i) @<> '');

SubsetDestroy(tabdim(CubeName,i), SubsetName);

i = i + 1;

end;

Debugging

AsciiOutput can help track which values are being used during the execution of your TI processes.

use:

AsciiOutput('\\path\to\debug.cma',var1,var2,NumberToString(var3));

Keep in mind Asciioutput limitations:

- it is limited to 1024 characters per line

- it can deal only with strings, so you need to apply the NumberToString() function to all numeric variables that you would like to display like var3 in the example above.

- it opens/closes the file at every step of the TI (Prolog/Metadata/Data/Epilog). That means if you use the same filename to dump your variables in several of these tabs, the output of an earlier tab will be overwritten by a later one.

Hence you should use different filenames in each tab.

- use DataSourceASCIIQuoteCharacter=''; in the Prolog tab if you want to get rid of the quotes in output.

- use DatasourceASCIIThousandSeparator=''; to remove thousand separators.

- use DatasourceASCIIDelimiter='|'; to change from ',' to '|' as separators

Alternatively you can use ItemReject if the record you step through is rejected, it will then be dumped to the error message

ItemReject(var1|var2);


Dynamic SQL queries with TI parameters

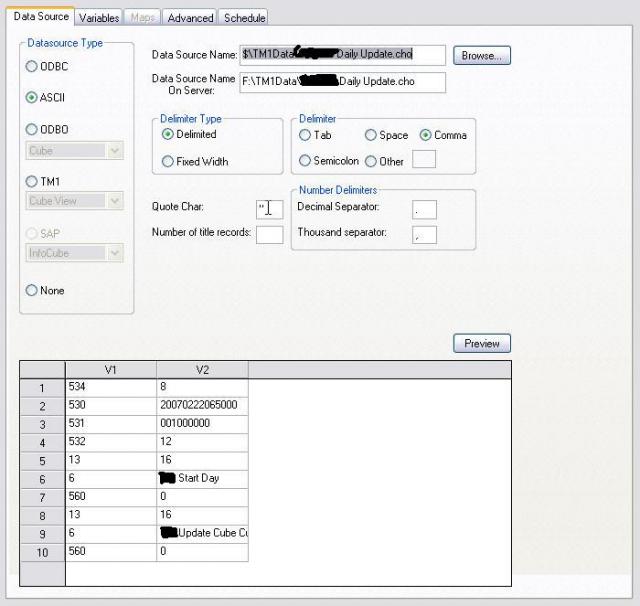

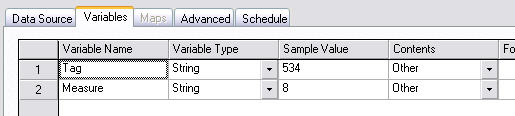

It is possible to use parameters in the SQL statement of Turbo Integrator to produce dynamic ODBC queries.

Here is how to proceed:

1. create your TI process

2. Advanced->Parameters Tab, insert parameter p0

3. Advanced->Prolog Tab add the processing code to define parameter p0

example: p0 = CellGetS(cube,dim1,dim2...)

4. save the TI process

5. open Data Source, add parameter p0 in WHERE clause

example select * from lib.table where name = '?p0?'

DO NOT CLICK ON THE VARIABLES TAB AT ANY TIME

6. run, answer "keep all variables" when prompted

If you need numeric parameters, there is a twist! numeric parameters do not work! (at least for TM1 9.x)

example: select * from lib.customer where purchase > ?p0? will fail although p0 is defined as a numeric and quotes have been removed accordingly.

But fear not, there is a "simple" workaround

1. proceed as above up to step 5

2. Advanced->Prolog tab, at the bottom: strp0 = NumberToString(p0);

3. Data Source tab, in the SQL statement replace ?p0? with CAST('?strp0?' as int)

example: select * from lib.customer where purchase > ?p0?

becomes select * from lib.customer where purchase > CAST('?strp0?' as int)

clicking the preview button will not show anything but the process will work as you can verify by placing an asciioutput in the Advanced->Data tab.

The CAST function is standard SQL so that should be working for any type of SQL server.

Note from Norman Bobo:

It is not necessary to add the "CAST" function to the SQL to pass numeric values. The trick is to think of the parameter as a substitution string, not a value. Define the parameter as a string and insert it into the SQL embedded in ?'s. When setting the value in the Advanced Prolog tab, convert the numeric value you would like to use into a string value. That value will be simply substituted into the SQL statement directly, as if you typed the value into the SQL yourself.

For instance:

In the Advanced/Prolog tab:

strFY = NUMBERTOSTRING(CELLGETN('Control Cube','FYElem','ControlValue'));

Parameter:

Name: strFY, datatype: string, Default value: 2010, Prompt: Enter the FY:

In the SQL:

...

where FY = ?strFY?
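The point of the note above is that ?name? markers behave like plain text substitution. The following Python sketch is a toy model of that behaviour, written only to illustrate the idea; it is not how TM1 implements it, and `substitute` is a hypothetical helper.

```python
import re


def substitute(sql: str, params: dict) -> str:
    """Replace every ?name? marker with the string value of that parameter.
    Unknown markers are left untouched."""
    def repl(match):
        name = match.group(1)
        return str(params[name]) if name in params else match.group(0)
    return re.sub(r"\?(\w+)\?", repl, sql)


if __name__ == "__main__":
    # String parameter: the quotes are part of the SQL text, not of the value
    print(substitute("select * from lib.table where name = '?p0?'", {"p0": "Smith"}))
    # Numeric value passed as a string: it lands in the SQL as if typed by hand
    print(substitute("select * from lib.customer where FY = ?strFY?", {"strFY": "2010"}))
```

Seen this way, whether the final SQL needs quotes or a CAST depends only on what the substituted text looks like once it is in place.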

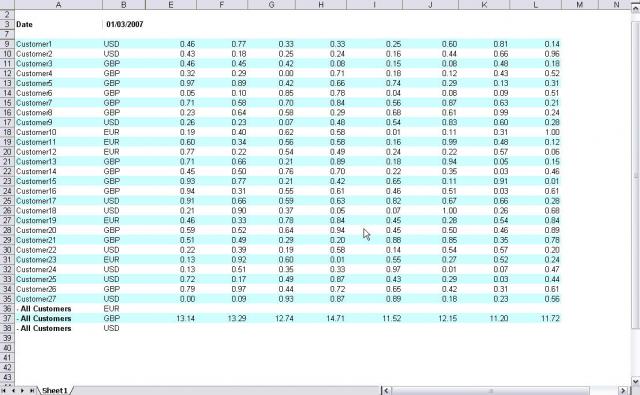

Dynamic formatting

It is possible to preformat dynamic slices by using the Edit Element Formats button in the subset editor. However, that formatting is static and will not apply to new elements of a slowly changing dimension. It also takes a long time to load/save when you try to apply it to more than a few dozen elements.

As an example, we will demonstrate how to dynamically alternate row colors in a TM1 report for a Customer dimension.

- Open the subset editor for the Customer dimension

- Select some elements and click "Edit Element Formats"

- The "Edit Element Formats" worksheet opens, just click Save as "colored row"

this creates the }DimensionFormatStyles_Customer dimension and the }DimensionFormats_Customer cube.

Now we can modify this cube with our rules.

open the rules editor for the }DimensionFormats_Customer cube, add this:

#alternate row colors for all elements

['colored row','Cond1Type'] = S: '2';

['colored row','Cond1Formula1'] = S: '=MOD(ROW(),2)=1';

['colored row','Cond1InteriorColorIndex'] = S: '34';

['colored row','Cond2Type'] = S: '2';

['colored row','Cond2Formula1'] = S: '=MOD(ROW(),2)=0';

['colored row','Cond2InteriorColorIndex'] = S: '2';

now slice a view with the Customer dimension, applying that "colored row" style.

result:

To create a different style:

- Edit one element from the "Edit element format", apply the desired formatting and save

- Note the new values of the measures in the }DimensionFormats_Customer cube for that element

- Reflect these changes in the rules to apply to all elements for that style

Padding zeroes

This is a short, simple syntax to pad an index value with zeroes:

Period = 'Period ' | NumberToStringEx(index, '00','','');

(from olap forums)

Alternatively, one could also write (prior to TM1 8.2):

Period = 'Period ' | If(index < 10,'0','') | NumberToString(index);
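For readers who want to check the expected output of both variants outside TM1, here is the same two-digit padding logic sketched in Python. It is only a comparison aid: `period_name` mirrors the format-mask approach and `period_name_legacy` mirrors the conditional prefix, where the leading zero is added only when the index is below 10.

```python
def period_name(index: int) -> str:
    """Format-mask approach: always render the index on two digits."""
    return f"Period {index:02d}"


def period_name_legacy(index: int) -> str:
    """Conditional approach: prefix a zero only for single-digit indexes."""
    prefix = "0" if index < 10 else ""
    return "Period " + prefix + str(index)


if __name__ == "__main__":
    for i in (1, 9, 10, 12):
        print(period_name(i), "|", period_name_legacy(i))
```

Both functions agree for indexes 1 to 99, which is the range a two-digit mask is meant for.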

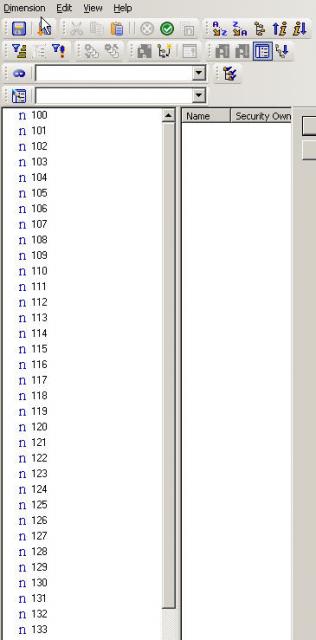

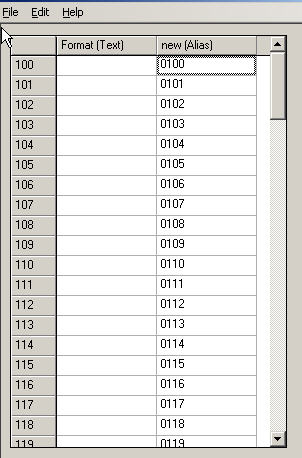

Renaming elements

Renaming elements without activating aliases? Yes we can!

The dimension editor and dimension worksheets cannot rename elements directly, so let me introduce you to SwapAliasWithPrincipalName.

In this example, we will add a padding "0" to an existing set of elements without rebuilding the dimension from scratch.

Before swap:

- Create a new alias "new" in the Dimension, by default the new elements are identical

- Change all required elements to their new names in that alias. Below we pad a zero in front of all elements

Now create a new TI with the following line in the Prolog Tab:

SwapAliasWithPrincipalName(Dimension,'new',0);

The third parameter needs to be zero to execute the swap. If you know if it has any purpose, please leave a comment to enlighten us.

After swap:

And the "old" elements have become the "new" alias:

/!\Make sure the associated dimension worksheet is also updated if there is one!!!

This was tested successfully under v. 9.0.3 and v. 9.4.

This TI function is listed in the TM1 documentation, however there is no description of its function and syntax.

Click on the RTFM FAIL tag to find out some other poorly documented or simply undocumented TM1 functions.

Rules

Dimension elements containing a single quote character (') require that quote to be doubled to be interpreted correctly by the rules engine.

Example: ['department's'] -> ['department''s']

One could use aliases without single quote characters too, however the rule would break if anything were to happen to these aliases. So it is best practice not to use single quote characters in your elements in the first place and if you really need them, then use the double quote in the rules.
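If rules are generated by a script, the quote doubling can be applied automatically. A minimal Python sketch, where `rule_element` is a hypothetical helper that wraps an element name in the bracket-and-quote syntax used in the example above:

```python
def rule_element(name: str) -> str:
    """Return the element reference as it should appear in a rule,
    doubling any single quote contained in the element name."""
    return "['" + name.replace("'", "''") + "']"


if __name__ == "__main__":
    print(rule_element("department's"))
    print(rule_element("Total"))
```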

After modifying cells through rules, the consolidations of these cells won't match the new values.

To reconcile consolidations, add to your rules:

['Total'] = ConsolidateChildren('dimension');

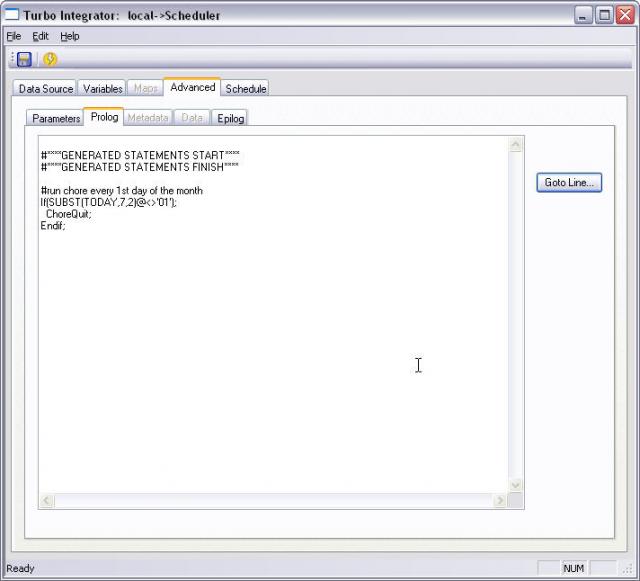

Scheduling chores on calendar events

Running chores on a specific day of the month

Scheduling chores in TM1 can be frustrating as it does not offer to run on specific dates or other types of events. The following article explains how to create chores schedules as flexible as you need them to be.

From the Server Explorer

- Create a new process

- Click Advanced ->Prolog tab

- Add this code:

#run chore every 1st day of the month

If(SUBST(TODAY,7,2)@<>'01');

ChoreQuit;

Endif;

- Save the process as "Scheduler"

- Create a new chore

- Select "Scheduler" and the other process(es) that you need to run

- It is important to put the "Scheduler" process first in the list

- Set it to run once a day

You are now set: that chore will run every first day of the month.

The ChoreQuit command will abort the "Scheduler" process and the subsequent processes in the chore list if today's date is not "01" i.e. the first day of the month.

From the above code you see that you can actually get your chore to run based on any sort of event, all you need is to change the conditional statement to the desired event.

Running chores on specific days of the week

DayOfWeek = Mod( DayNo( TODAY ) + 21915, 7 );

# 0 = Sunday, 1 = Monday to 6 = Saturday.

If( DayOfWeek = 0 % DayOfWeek > 4 );

ChoreQuit;

Endif;
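The day-of-week formula can be checked outside TM1. The Python sketch below reproduces it under one assumption: that DayNo counts days from 1 January 1960 (serial date 0). The function names are illustrative only.

```python
from datetime import date

TM1_EPOCH = date(1960, 1, 1)  # assumption: DayNo returns 0 for this date


def tm1_day_of_week(d: date) -> int:
    """Same arithmetic as Mod(DayNo(TODAY) + 21915, 7): 0 = Sunday ... 6 = Saturday."""
    day_no = (d - TM1_EPOCH).days
    return (day_no + 21915) % 7


def chore_would_quit(d: date) -> bool:
    """Mirror of the condition DayOfWeek = 0 % DayOfWeek > 4 (% is OR in TI)."""
    dow = tm1_day_of_week(d)
    return dow == 0 or dow > 4


if __name__ == "__main__":
    for d in (date(2024, 1, 7), date(2024, 1, 8), date(2024, 1, 5)):
        print(d, d.strftime("%A"), tm1_day_of_week(d), chore_would_quit(d))
```

Note that with the condition exactly as written, value 5 (Friday) also triggers ChoreQuit, so the chore runs Monday to Thursday; use `DayOfWeek > 5` if Friday should be included.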

Send email/attachments

It is possible to send email alerts or reports as attachments from Turbo Integrator. This can be achieved by executing a VB script.

- Save the attached VB script on your TM1 server

- Create a TI process

- In the Epilog tab, add the following code:

S_Run='cmd /c D:\path\to\SendMail.vbs smtp.mycompany.com 25 tm1@mycompany.com me@mycompany.com "Today report" "check it out" E:\TM1Reports\todaysreport.xls';

ExecuteCommand(S_Run,0);

The syntax is:

SendMail.vbs server port sender destination subject body attachment

so replace the fields as required to suit your setup

/!\ The DOS command line is limited to 255 characters so avoid putting too much information in the body.

/!\ If a field contains a blank space you must enclose that field in quotes so the script gets the correct parameters
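Both warnings above are easy to get wrong when the command string is assembled by hand. The following Python sketch builds the command, quotes any field containing a space, and refuses commands longer than the 255 characters mentioned above. `build_sendmail_command` is a hypothetical helper; it relies on `subprocess.list2cmdline` from the standard library for the quoting.

```python
import subprocess

MAX_COMMAND_LENGTH = 255  # limit quoted in the text above


def build_sendmail_command(script, server, port, sender, destination,
                           subject, body, attachment):
    """Return the full 'cmd /c ...' string in the argument order
    server port sender destination subject body attachment."""
    args = [script, server, str(port), sender, destination,
            subject, body, attachment]
    # list2cmdline wraps arguments containing spaces in double quotes
    command = "cmd /c " + subprocess.list2cmdline(args)
    if len(command) > MAX_COMMAND_LENGTH:
        raise ValueError(f"command is {len(command)} characters, "
                         f"limit is {MAX_COMMAND_LENGTH}")
    return command


if __name__ == "__main__":
    print(build_sendmail_command(
        r"D:\path\to\SendMail.vbs", "smtp.mycompany.com", 25,
        "tm1@mycompany.com", "me@mycompany.com",
        "Today report", "check it out", r"E:\TM1Reports\todaysreport.xls"))
```

The resulting string can then be pasted into the S_Run assignment of the TI process.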

code from kc_kang (applix forum) available at https://web.archive.org/web/20120620123310/http://www.rondebruin.nl/cdo.htm

Silent failures

TM1 processes will not complain when their input source is empty.

So although the "process successful" or "chore successful" message will popup, your cube will remain desperately empty.

In order to solve that silent bug (or rather "feature" in IBM's eyes), you will need to add specific code to your TI processes to test against empty sources.

Here follows:

- initialise counter

PROLOG TAB

SLineCount = 0;

#****GENERATED STATEMENTS START****

- increment counter

DATA TAB

SLineCount = SLineCount + 1;

#****GENERATED STATEMENTS START****

- check counter value at the end and take appropriate action

EPILOG TAB

if(SLineCount = 0);

ItemReject('input source ' | DataSourceType | ' ' | DataSourceNameForServer | ' is empty!');

endif;

#****GENERATED STATEMENTS START****

ItemReject will send the error to the msg log and the execution status box will signal a minor error.

TM1 lint

alias.pl is a very basic lint tool whose task is to flag "dangerous" TM1 rules.

For now it is only checking for aliases in rules.

Indeed, if an alias is changed or deleted, any rule based on that alias will stop working without any warning from the system. The values will remain in place until the cube or its rules gets reloaded but you will only get a "silent" warning in the messages log after reloading the cube.

How to proceed:

- Configure and execute the following TI process (the code goes in the Prolog). It generates a list of all cubes and associated dimensions, and a dictionary of all aliases on the system, in .csv format.

### PROLOG

#configure the 2 lines below for your system

report = 'D:\alias.dictionary.csv';

report1 = 'D:\cubes.csv';

#

##############################################

#it is assumed that none of the aliases contain commas

DataSourceASCIIQuoteCharacter = '';

######create a list of all cubes and associated dimensions

c = 1;

while(c <= DimSiz('}Cubes'));

cube = DIMNM('}Cubes',c);

d = 1;

while(tabdim(cube,d) @<> '');

asciioutput(report1, cube, tabdim(cube, d));

d = d + 1;

end;

c = c + 1;

end;

######create a dictionary of all aliases

d = 1;

while(d <= DimSiz('}Dimensions'));

dim = DIMNM('}Dimensions',d);

#skip control dimensions

If(SUBST(dim,1,1) @<> '}');

attributes = '}ElementAttributes_' | dim;

#any aliases?

If(DIMIX('}Dimensions',attributes) <> 0);

a = 1;

while(a <= DimSiz(attributes));

attr = DIMNM(attributes,a);

#is it an alias?

If(DTYPE(attributes,attr) @= 'AA');

#go through all elements and report the ones different from the principal name

e = 1;

while(e <= DimSiz(dim));

element = DIMNM(dim,e);

If(element @<> ATTRS(dim,element,attr) & ATTRS(dim,element,attr) @<> '');

asciioutput(report,dim,attr,ATTRS(dim,element,attr));

Endif;

e = e + 1;

end;

Endif;

a = a + 1;

end;

Endif;

Endif;

d = d + 1;

end;

- Configure and execute the Perl script attached below.

That script loads the csv files generated earlier into hash tables, scans all rules files and finally reports any aliases.

If the element is ambiguous because it is present in 2 different dimensions then you should write it as dimension:'element' instead of using aliases (e.g. write Account:'71010' instead of '71010').
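To give an idea of what such a scan involves, here is a simplified Python sketch. It is not the alias.pl script: it only uses the alias dictionary (rows of dimension, attribute, alias as written by the TI process above), ignores the cube/dimension list, skips comment lines and reports every quoted token in a rule that matches a known alias. All function names are hypothetical.

```python
import csv
import io
import re

_QUOTED = re.compile(r"'([^']*)'")  # naive: does not handle doubled quotes


def load_aliases(csv_text: str) -> dict:
    """Map alias -> (dimension, attribute) from 'dimension,attribute,alias' rows."""
    aliases = {}
    for row in csv.reader(io.StringIO(csv_text)):
        if len(row) == 3:
            dim, attr, alias = row
            aliases[alias] = (dim, attr)
    return aliases


def find_aliases(rule_text: str, aliases: dict) -> list:
    """Return (line number, alias, dimension, attribute) for each alias found."""
    hits = []
    for line_no, line in enumerate(rule_text.splitlines(), start=1):
        if line.lstrip().startswith("#"):
            continue  # skip commented-out rules
        for token in _QUOTED.findall(line):
            if token in aliases:
                hits.append((line_no, token) + aliases[token])
    return hits


if __name__ == "__main__":
    dictionary = "Account,Description,Sales Revenue\n"
    rules = "['Sales Revenue'] = N: 1;\n['71010'] = N: 2;\n"
    for hit in find_aliases(rules, load_aliases(dictionary)):
        print(hit)
```

Because it ignores which dimensions belong to the cube, this sketch can report false positives when the same text is an alias in one dimension and a principal name in another.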

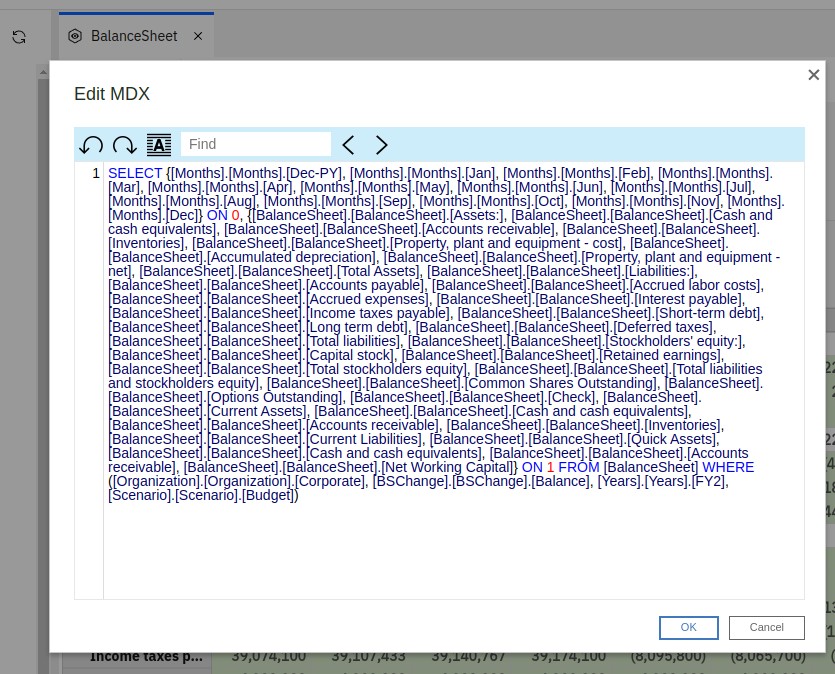

Pimp my PAW


I'm in the PAW Workbench, I open a view and I click on the "Edit MDX" button. I get a packed MDX block, with no indentation whatsoever, pretty hard to read. If only there was an option to format that MDX block to something much easier to read.....

**RING** **RING** Oh, I'm sorry, I must interrupt this blogpost, someone is at the door.

Please hold on.

- I'm sorry, who are you?

- Yo dawg! I'm Xzibit!

- Wait! What? What are you doing on this blog?

- Yo dawg I herd you like APIs so we put an API in yo API so you can MDX while you MDX.

- Is this a joke? This does not make any sense!

- It will make sense, buckle up yo.

This is going to be the setup:

chromium <---mitmproxy---> PAW server

- chromium opens the MDX Editor, that triggers a POST /api/v1/MDX call to the PAW server

- mitmproxy catches the json response from the PAW server

- a python script modifies that json data on-the-fly according to our needs

- mitmproxy hands over the modified data to chromium

- finally chromium displays the reformatted MDX expression

The whole process is completely transparent to the client and the server. We are inserting ourselves in the middle of an SSL encrypted connection, it will require installing a fake Certificate Authority to keep both client and server happy. And I don't like clicking around, so we will prepare that setup from the commandline.

First, we install the mdx-beautify.py script that mitmproxy will be using to process the PAW server responses.

mkdir ~/.mitmproxy && cat > ~/.mitmproxy/mdx-beautify.py << EOF
from sql_formatter.core import format_sql
from mitmproxy import http
import json

def response(flow: http.HTTPFlow) -> None:
    # intercept MDX API responses
    if flow.request.pretty_url.endswith("/api/v1/MDX"):
        content = json.loads(flow.response.content)
        mdx_pretty = format_sql(content['Mdx'])
        # inject the expression back in the flow
        content['Mdx'] = mdx_pretty
        flow.response.content = bytes(json.dumps(content),"UTF-8")
EOF
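The JSON round trip in that script can be tried offline, without a proxy or a PAW server. The sketch below isolates it in a plain function that takes the formatter as an argument. `beautify_payload` and `naive_format` are hypothetical names, and `naive_format` is only a crude stand-in for a real formatter: it breaks the line before FROM and WHERE and does nothing else.

```python
import json
from typing import Callable


def beautify_payload(raw: bytes, formatter: Callable[[str], str]) -> bytes:
    """Decode a JSON response body, reformat its 'Mdx' value if present,
    and return the re-encoded body."""
    content = json.loads(raw)
    if "Mdx" in content:
        content["Mdx"] = formatter(content["Mdx"])
    return json.dumps(content).encode("utf-8")


def naive_format(mdx: str) -> str:
    """Stand-in formatter: start a new line before FROM and WHERE."""
    for keyword in (" FROM ", " WHERE "):
        mdx = mdx.replace(keyword, "\n" + keyword.strip() + " ")
    return mdx


if __name__ == "__main__":
    raw = json.dumps({"Mdx": "SELECT {} ON 0 FROM [Sales] WHERE ([Year].[2010])"}).encode()
    print(json.loads(beautify_payload(raw, naive_format))["Mdx"])
```

Keeping the transformation separate from the mitmproxy hook makes it easy to swap in another formatter later and to test it on captured responses.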

Before installing the mitmproxy/mitmproxy docker image, we need to extend that docker image with the python package "sql formatter". sql formatter is not ideal to format MDX, but that will be good enough for the sake of that proof of concept:

docker build --tag mitmproxy-sqlfmt - << EOF
FROM mitmproxy/mitmproxy
RUN pip install sql_formatter
EOF

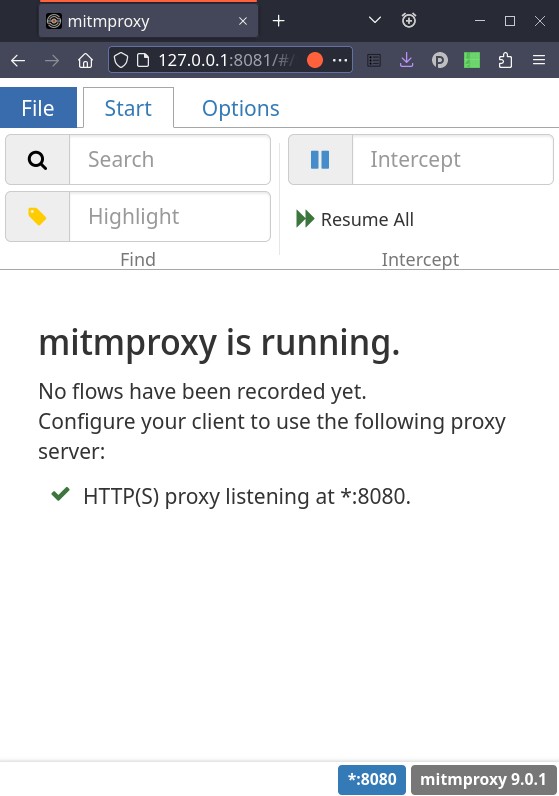

Now run that new build with the mdx-beautify.py script and a localhost web interface on port 8081 to display what goes through the proxy:

docker run --rm -it -p 8080:8080 -p 127.0.0.1:8081:8081 -v ~/.mitmproxy:/home/mitmproxy/.mitmproxy mitmproxy-sqlfmt mitmweb -s /home/mitmproxy/.mitmproxy/mdx-beautify.py --web-host 0.0.0.0

On its first run, the container generates all the necessary certificates in ~/.mitmproxy .

With a browser, navigate to http://127.0.0.1:8081. This is the mitmweb interface, which allows you to see all the exchanges between the client and the PAW server. We will not use that browser to access the PAW server; it is only there to watch what's happening in the background.

As it is suggested on that screenshot, we will now configure a chromium client to use that proxy.

But first, a word of warning: installing fake CAs is messy, and it creates a huge security vulnerability in your browser, because anybody in possession of the CA keys could read the traffic from your browser. So we are going to sandbox chromium with firejail in order to keep that setup isolated in a dedicated directory, ~/jail, which will be much easier to clean up afterwards.

We initialise the certificates database in the jail with certutil from mozilla-nss-tools and populate it with the newly generated mitmproxy certificate. That will spare us from messing with the browser settings at chrome://settings/certificates

mkdir -p ~/jail/.pki/nssdb && certutil -d sql:$HOME/jail/.pki/nssdb -A -t "CT,c,c" -n mitmproxy -i ~/.mitmproxy/mitmproxy-ca-cert.pem

We launch the sandboxed chromium in firejail with the preconfigured proxy address

firejail --env=https_proxy=127.0.0.1:8080 --private=~/jail chromium

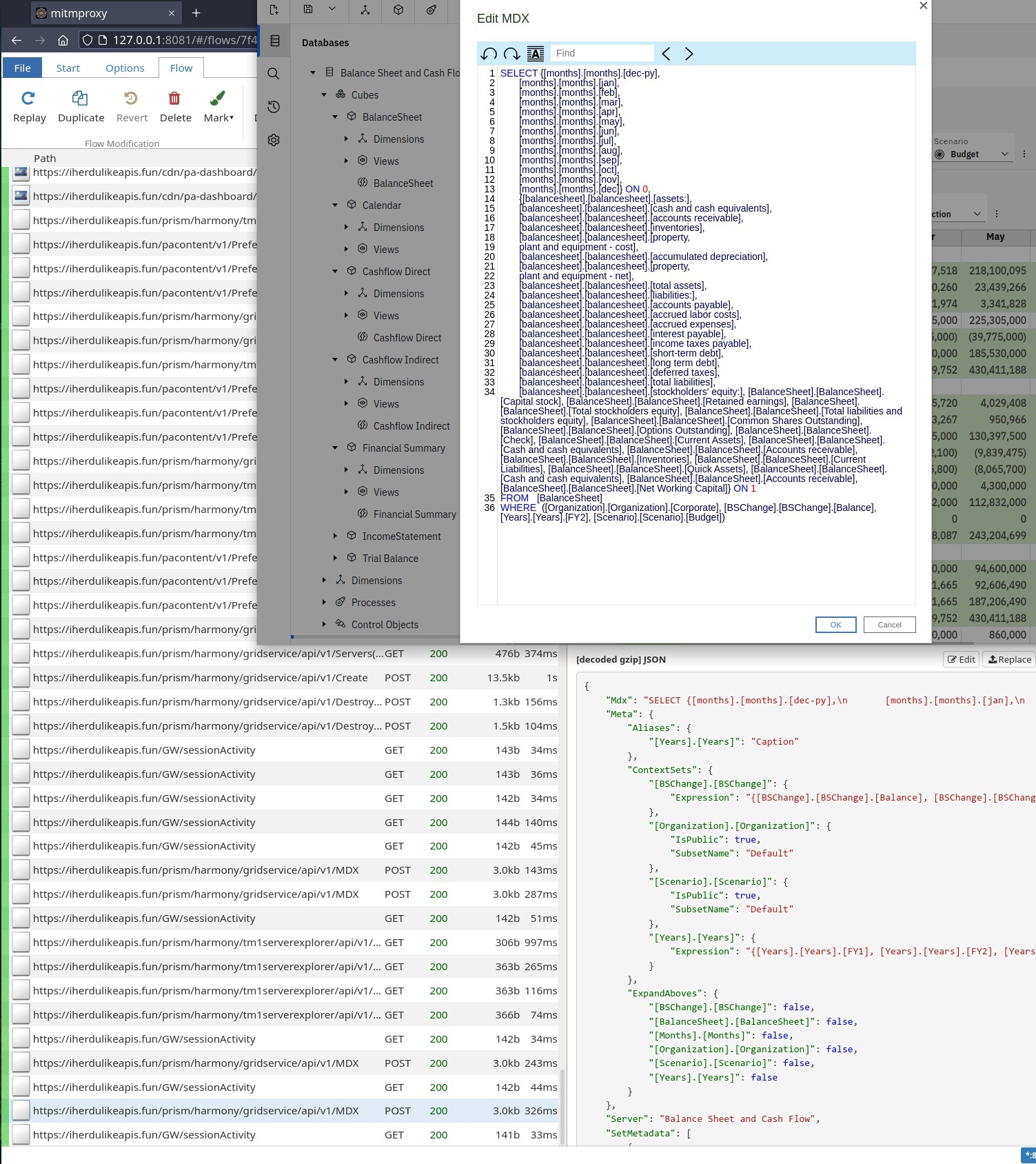

With this jailed chromium, navigate to the URL where your PAW server is located, log in, open the workbench and open some cube view, as you normally would.

Now, you see on the screenshot below, on the bottom left, clicking on the "Edit MDX" button resulted in a POST /api/v1/MDX call to the PAW server. On the right, in the response from the server, the value for the key "Mdx" is correctly showing "\n" and space indentations. And finally, the "Edit MDX" window is showing the MDX expression almost formatted to our liking.

- OK! But this is definitely not something that I would deploy to users.

- Right yo! This setup is only intended for educational purposes, and it is about as useful as print "hello world!". But it gives you a different approach to dig in the PAW API, and a way to inject it with python code through the mitmproxy scripting API.

- Thanks Xzibit!

TM1 operators

Logical operators

& AND

% OR

~ NOT

String operators

| concatenate

@= string1 equals string2

@<> string1 differs from string2

inserting '&' in strings:

ItemReject('This & That'); will return 'This _That'

ItemReject('This && That'); will return 'This & That'

Creating Dynamic Subsets in Applix TM1 with MDX - A Primer

Lead author: Philip Bichard.

Additional Material: Martin Findon.

About This Document

This MDX Primer is intended to serve as a simple introduction to creating dynamic dimension subsets using MDX in TM1. It focuses on giving working examples rather than trying to explain the complete theory of MDX and makes sure to cover the features most useful to TM1 users.

TM1 currently (as of 9.0 SP3) only allows users to use MDX to create dimension subsets and not to define cube views. This means that the usage of MDX in TM1 is often quite different in terms of both syntax and intention from examples found in books and on the internet.

As MDX (Multi-Dimensional eXpressions) is an industry-standard query language for OLAP databases from Microsoft there are many references and examples to be found on the Internet, though bear in mind that TM1 doesn’t support every aspect of the language and adds a few unique features of its own. This can make it difficult to use examples found on the web, whereas all the examples in this document can simply be copied-and-pasted into TM1 and will execute without modification, assuming you have the example mini-model created as documented later.


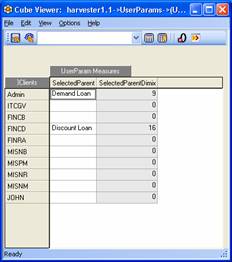

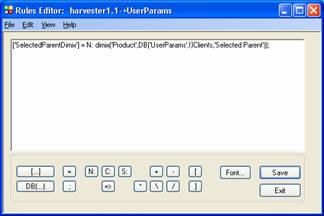

What is a MDX-based dynamic subset in TM1?

A dynamic subset is one which is not a fixed, static list but is instead based on a query which is re-evaluated every time the subset is used. In fact, MDX could be used to create a static subset, and an example is shown below, but this is unlikely to be useful or common.

Some examples of useful dynamic subsets might be a list of all base-level products; a list of our Top 10 customers by gross margin; a list of overdue supply shipments; all cost centres who have not yet submitted their budget. The point is, these lists (subsets) may vary from second to second based on the structure or data in TM1. For example, as soon as a new branch is added to Europe, the European Branches subset will immediately contain this new branch, without any manual intervention needed.

MDX is the query language used to define these subsets. MDX is an industry-standard query language for multi-dimensional databases like TM1, although TM1 only supports a certain subset (excuse the pun) of the entire language and adds in a few unique features of its own as well. When you define a subset using MDX instead of a standard subset, TM1 stores this definition rather than the resulting set. This means the definition – or query – is re-run every time you look at it – without the user or administrator needing to do anything at all. If the database has changed in some way then you may get different results from the last time you used it. For example, if a subset is defined as being “the children of West Coast Branches” and this initially returns “Oakland, San Francisco, San Diego” when it is first defined, it may later return “Oakland, San Francisco, San Diego, Los Angeles” once LA has been added into the dimension as a child of West Coast Branches. This is what we mean by “dynamic” – the result changes. Another reason that can cause the subset to change is when it is based on the values within a cube or attribute. Every day in the newspaper the biggest stock market movers are listed, such as a top 10 in terms of share price rise. In a TM1 model this would be a subset looking at a share price change measure and clearly would be likely to return a different set of 10 members every day. The best part is that the subset will update its results automatically without any work needed on the part of a user.
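The difference between storing a result and storing a definition can be illustrated outside TM1. This is only a loose analogy in Python, not how TM1 works internally: the children dictionary stands in for the dimension, static_subset is a saved list, and dynamic_subset is a stored query that is re-run on every use.

```python
# Hypothetical stand-in for a dimension: parent -> list of children.
children = {"West Coast Branches": ["Oakland", "San Francisco", "San Diego"]}

# A static subset keeps a copy of the result at the time it was saved.
static_subset = list(children["West Coast Branches"])


# A dynamic subset keeps the definition and evaluates it each time it is used.
def dynamic_subset():
    return list(children["West Coast Branches"])


# LA is later added to the dimension as a child of West Coast Branches.
children["West Coast Branches"].append("Los Angeles")

print(static_subset)     # still the three original branches
print(dynamic_subset())  # now includes Los Angeles
```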

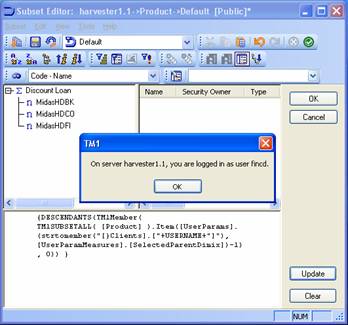

How to create a MDX-based subset in TM1

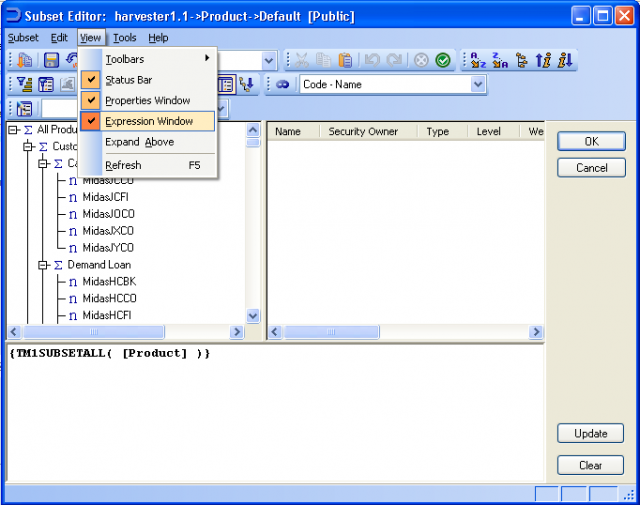

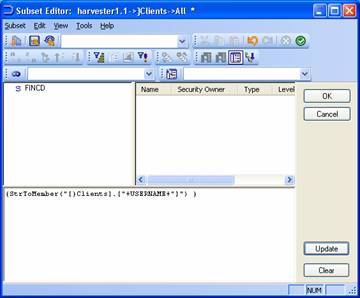

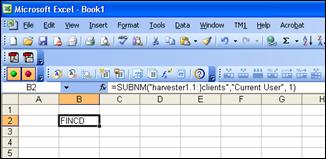

The same basic steps can be followed with all the examples in this document. Generally the examples can be copy-and-pasted into the Expression Window of the Subset Editor of the dimension in question – often Product. Note that it is irrelevant which cube the dimension is being used by; you will get the same results whether you open the dimension Subset Editor from within a cube view, the cube tree in Server Explorer or the dimension tree in Server Explorer.

In order to view and edit an MDX query you must be able to see the Expression Window in the Subset Editor. To toggle this window on and off choose View / Expression Window.

You can now just type (or paste) your query into the Expression Window and press the Update button to see the results.

How to create a static subset with MDX

A static subset is one which never varies in its content.

This query will return the same 3 members (Discount Loan, Term Loan and Retail) every time.

{ [Product].[Discount Loan], [Product].[Term Loan], [Product].[Retail] }

Don’t worry, it gets more exciting from here.

How to create a dynamic subset with MDX

TM1 only supports a certain number of functions from the complete MDX specification. Different versions of TM1 will support different functions (and potentially support them in different ways). The valid set of functions for the version of TM1 that you are using can be found in the main Help file, under Reference Material / MDX Function Support. Before trying to write a new query, make sure it is supported, and although some unlisted functions certainly do work they must be used at your own risk. The standard error message which means the function is genuinely not supported by your version of TM1 is, "Failed to compile expression".

One word of warning: by its very nature, the results of a dynamic subset can change. When including dynamic subsets in views, processes, SUBNM functions, and so forth, consider carefully what the potential future results might be, especially if the subset could one day be empty.

The two most common methods to go about actually creating a dynamic subset are to create them by hand or using TurboIntegrator.

By hand. You can either type (or paste) a query into the Expression Window as explained earlier, or you can choose Tools / Record Expression (and then Stop Recording when done) to turn on a kind of video recorder. You can then use the normal features of the subset editor (e.g. select by level, sort descending, etc.) and this recorder will turn your actions into a valid MDX expression. This is a great way to see some examples of valid syntax, especially for more complex queries.

When you have been recording an expression and choose Stop Recording, TM1 will ask you to confirm whether you wish to attach the expression to the subset. Make sure to say ‘Yes’ and tick the ‘Save Expression’ checkbox when saving the resulting subset, otherwise only a static list of the result is saved, not the dynamic query itself.

Using TurboIntegrator. Only one line, using SubsetCreateByMDX, is needed to create and define the subset. You will need to know what query you want as the definition already. Note that the query can be built up in the TI script using text concatenation so can incorporate variables from your script and allow long queries to be built up in stages which are easier to read and maintain.

SubsetCreatebyMDX('Base Products','{TM1FILTERBYLEVEL({TM1SUBSETALL( [Product] )}, 0)}');

All TI-created MDX subsets are saved as dynamic MDX queries automatically and not as a static list.

Note that, at least up to TM1 v9.0 SP3, MDX-based subsets cannot be destroyed (SubsetDestroy) if they are being used by a public view, and they cannot be recreated by using a second SubsetCreateByMDX command. Therefore it is difficult to amend MDX-based subsets using TI. While the dynamic nature of the subset definition may make it somewhat unlikely you will actually want to do this, it is important to bear in mind. If you need to change some aspect of the query (e.g. a TM1FilterByPattern from “2006-12*” to “2007-01*”) you may have to define the query to use external parameters, as documented later in this document. This will have a small performance impact over the simpler hardcoded version.

Also, filtering against the values of a cube with SubsetCreateByMDX in the Epilog tab, e.g. {FILTER({TM1FILTERBYLEVEL( {TM1SUBSETALL( [Product] )}, 0)},[Test].([Posting Measures].[Amount]) > 0 )}, will not work if the values happen to have been loaded in the Data tab. You need to execute the SubsetCreateByMDX command in a subsequent TI process.

Note that TI has a limit of 256 characters for defining MDX subsets, at least up to v9.1 SP3, which can be quite limiting.

Syntax and Layout

A query can be broken over multiple lines to make it more readable. For example:

{
  FILTER(
    {TM1FILTERBYLEVEL( {TM1SUBSETALL( [Product] )}, 0)},
    Test2.([Rate Measures].[Rate]) = 19
  )
}

is more readable than having the whole query in one line. The actual filter section is more easily read and modified now by having it on a line by itself.

Note that references to members usually have the dimension name as a prefix. For example,

{ {[Product].[Retail].Children} }

In fact the dimension name is optional – but only if the member name (Retail in this case) is completely unique within the entire server - i.e. there are no cubes, dimensions or members with that exact name. For example this is the same query with the dimension name omitted:

{ {[Retail].Children} }

Which would work in the context of the sample application used by this document but would be risky in a real-world application. The error message received when forgetting to specify the prefix would be something like, “Level or member name “[Retail]” ambiguous: found in dimensions…” and then it goes on to list the various dimensions in which the non-unique member name can be found, which is very helpful. It is therefore certainly safest and most performant to always use the dimension prefix.

The use of square brackets can sometimes seem a bit arbitrary when reading examples of MDX queries. The fact is that an OLAP object name (e.g. cube name, dimension name, member name) must be enclosed in square brackets only if it contains a space, starts with a number or is an MDX reserved word (e.g. Select). However, sometimes it can be simpler to decide to always use brackets so similar queries can be compared side by side more easily.

The exact definition of a member in TM1 is almost always expressed as [Dimension Name].[Member Name] and no more. In other products that also use MDX as a query language (such as Microsoft Analysis Services) you may notice that queries specify the full ‘path’ from the dimension name through the hierarchy down to the member name, for example:

[Date].[2009].[Q1].[Feb].[Week 06]

This can also be written as [Date].[2009^Q1^Feb^Week 06]

The reason for this is that other products may not require every member name to be unique, since each member has a context (its family) to enable it to be uniquely identified. That is why they need to know exactly which Week 06 is required, since there may be others (in 2008 for instance in the above example). TM1 requires all member names, at any level (and within Aliases), to be completely unique within that dimension. TM1 would need you to make Q1, Feb and Week 06 more explicit in the first place (i.e. Q1 2009, Feb 2009, Week 06 2009) but you can then just refer to [Date].[Week 06 2009].

Finally, case (i.e. capital letters versus lower case) is not important with MDX commands (e.g. Filter or FILTER, TOPCOUNT or TopCount are all fine) but again you may prefer to adopt just one style as standard to make it easier to read.

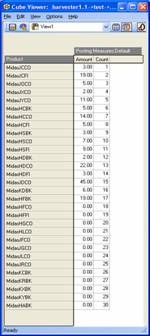

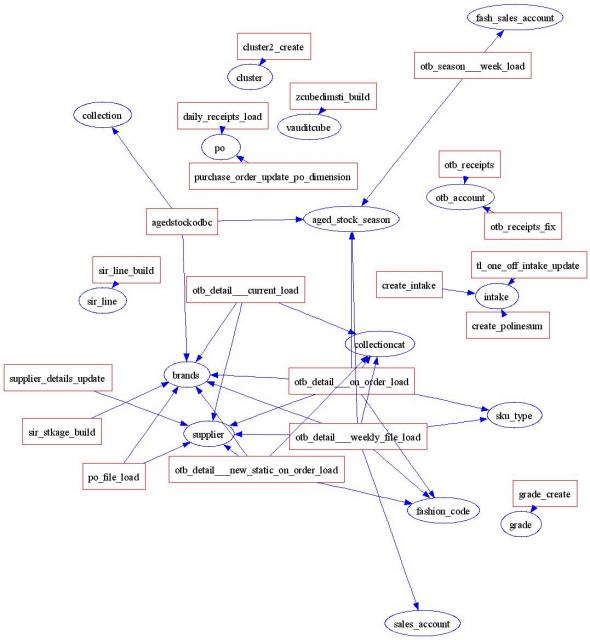

The example model used

In this document many examples of dynamic queries will be given. They all work (exactly as written, just copy-and-paste them into the Expression Window in the Subset Editor of the appropriate dimension to use them) on the simple set of cubes and dimensions shown below. The model is deliberately simple with no special characteristics so you should find it easy to transfer the work to your own model.

The model used includes 1 main dimension, Product, on which the vast majority of the queries work, plus 3 cubes: Test, Test2 and Test3. The data values in the cubes will vary during testing (you’ll want to tweak the values and re-run the query to make sure the results change and are correct) but the screenshots below show the cube and dimension structures well enough for you to quickly recreate them or to use your own model instead. To simplify the distribution of this document there is no intention to also distribute the actual TM1 model files. Note that the main dimension used, Product, features ragged and multiple hierarchies.

TM1SubsetAll, Members, member range

The basis for many queries, this returns (almost, see below) the entire dimension, which is the equivalent of clicking the ‘All’ button in the Subset Editor.

TM1SUBSETALL( [Product] )

Note that only the final instance in the first hierarchy of members that are consolidated multiple times is returned.

The Members function, on the other hand, delivers the full dimension, duplicates included:

[Product].Members

A range of contiguous members from the same level can be selected by specifying the first and last member of the set you require with a colon between them.

This example returns Jan 1st through to Jan 12th 1972.

{[Date].[1972-01-01]:[Date].[1972-01-12]}

Select by Level, Regular Expression (Pattern) and Ordinal

Selecting members based on their level in the dimension hierarchy (TM1FilterByLevel) or by a pattern of strings in their name (TM1FilterByPattern) can be seen easily by using the Record Expression feature in the subset editor.

The classic “all leaf members” query using TM1’s level filtering command TM1FilterByLevel:

{TM1FILTERBYLEVEL( {TM1SUBSETALL( [Product] )}, 0)}

Select all the leaf members which match the wildcard ‘*HC??’ – i.e. that have H and C as the fourth and third characters from the end of their name.

{TM1FILTERBYPATTERN( {TM1FILTERBYLEVEL( {TM1SUBSETALL( [Product] )}, 0)}, "*HC??")}
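To get a feel for what a wildcard such as *HC?? selects, the same kind of matching can be tried in Python with the standard fnmatch module, where * matches any run of characters and ? matches exactly one. This is only a sketch of the wildcard idea, not of TM1's implementation: filter_by_pattern is a hypothetical helper, the case-insensitive comparison is an assumption, and the member names (apart from MidasJCFI) are made up.

```python
from fnmatch import fnmatchcase


def filter_by_pattern(names, pattern):
    """Keep the names matching a * / ? wildcard, ignoring case (an assumption)."""
    return [n for n in names if fnmatchcase(n.upper(), pattern.upper())]


# Hypothetical leaf member names.
leaves = ["MidasHCBK", "MidasHCFI", "MidasJCFI", "HC"]
matches = filter_by_pattern(leaves, "*HC??")
print(matches)
```

Note that fnmatch also gives square brackets a special meaning, so this sketch is only safe for patterns built from plain characters, * and ?.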

The reason that these functions start with “TM1” is that they are not standard MDX commands and are unique to TM1. There are two main reasons why Applix will implement such unique functions: to add a feature that is present in “standard” TM1 and users will miss if it is not there; or because “standard” TM1 has the same feature as MDX but has historically implemented it slightly differently to MDX and therefore would, again, cause users problems if it was only implemented in the standard MDX way.

In these two cases, TM1FilterByPattern brings in a function commonly used by TM1 users that is lacking in MDX, while TM1FilterByLevel exists because TM1 has, since its launch in 1984, numbered consolidation levels starting at zero for the leaf level rising up the levels to the total members, while Microsoft decided to do it the exact opposite way.

In certain situations it is useful to use the standard MDX levels method and this is also available with the Levels function. It allows you to return the members of a dimension that reside at the same level as a named member; just bear in mind that standard MDX orders the levels in terms of their distance from the top of the hierarchy and not from the bottom as TM1 does.

This example returns all the members at the same level as the Retail member:

{ {[Product].[Retail].Level.Members} }

Which, although Retail is a high level consolidation, returns an N: item (Product Not Applicable) in the dimension because this rolls straight up into All Products as does Retail so they are considered to be at the same level.
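The two numbering schemes can be compared on a tiny ragged hierarchy. The sketch below is an illustration only: the children dictionary is a hypothetical fragment (the real Product dimension is larger), tm1_level counts up from the leaves as TM1 does, and mdx_ordinal counts down from the top, with the root taken to be at 0 as an assumption.

```python
# Hypothetical fragment of a ragged hierarchy: parent -> children.
children = {
    "All Products": ["Retail", "Product Not Applicable"],
    "Retail": ["Discount Loan", "Term Loan"],
}


def tm1_level(member):
    """TM1 style: leaves are level 0, a consolidation is one above its highest child."""
    kids = children.get(member, [])
    return 0 if not kids else 1 + max(tm1_level(k) for k in kids)


def mdx_ordinal(member, node="All Products", depth=0):
    """Standard MDX style: distance from the top of the hierarchy."""
    if node == member:
        return depth
    for kid in children.get(node, []):
        found = mdx_ordinal(member, kid, depth + 1)
        if found is not None:
            return found
    return None


for name in ("Retail", "Product Not Applicable"):
    print(name, tm1_level(name), mdx_ordinal(name))
```

Retail and Product Not Applicable sit at different TM1 levels (1 and 0) but at the same distance from the top, which is why a query on Retail's level also returns the leaf member.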

To filter the dimension based on a level number you need to use the .Ordinal function. This is not documented as being supported in the Help file, and did not work in 8.2.7, but appears to work in 9.0 SP3 and 9.1.1.36 at least.

This example returns all the members at Level 1:

{Filter( {TM1SUBSETALL( [Product] )}, [Product].CurrentMember.Level.Ordinal = 1)}

This example would return all members not at the same level as Discount Loan.

{Filter( {TM1SUBSETALL( [Product] )}, [Product].CurrentMember.Level.Ordinal <> [Product].[Discount Loan].Level.Ordinal)}

TM1Sort, TM1SortByIndex and Order

TM1Sort is the equivalent of pressing one of the two Sort Ascending or Sort Descending buttons in the subset editor – i.e. sort alphabetically.

TM1SortByIndex is the equivalent of pressing one of the two Sort by index, ascending or Sort by index, descending buttons in the subset editor – i.e. sort by the dimension index (dimix).

Order is a standard MDX function that uses a data value from a cube to perform the sort. For example, sort the list of customers according to the sales, or a list of employees according to their length of service.

Sort the whole Product dimension in alphabetically ascending order.

{TM1SORT( {TM1SUBSETALL( [Product] )}, ASC)}

Or, more usefully, just the leaf members:

{TM1SORT( TM1FILTERBYLEVEL({TM1SUBSETALL( [Product] )},0), ASC)}

Sort the leaf members according to their dimix:

{TM1SORTBYINDEX( TM1FILTERBYLEVEL({TM1SUBSETALL( [Product] )},0), ASC)}

Sort the leaf members of the dimension according to their Amount values in the Test cube from highest downwards.

{
  ORDER(
    { TM1FILTERBYLEVEL(
        {TM1SUBSETALL( [Product] )}
      ,0)}
    , [Test].([Posting Measures].[Amount]), BDESC)
}

Note that using BDESC instead of DESC gives radically different results. This is because BDESC treats all the members across the set used (in this case the whole dimension) as being equal siblings and ranks them accordingly, while DESC treats the members as still being in their “family groups” and ranks them only against their own “direct” siblings. If you’re not sure what this means and can’t see the difference when you try it out, then just use BDESC!
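If the difference is still hard to picture, the following Python sketch may help. It is a deliberately simplified model, not the exact MDX algorithm: it assumes every member has a single parent, keeps the "family groups" in the order in which they first appear, and uses made-up member names and values. order_bdesc ranks everything together, while order_desc only ranks members against their direct siblings.

```python
# Hypothetical leaf members with their parent and a cube value.
parent = {"A1": "A", "A2": "A", "B1": "B", "B2": "B"}
amount = {"A1": 10, "A2": 40, "B1": 30, "B2": 20}
members = ["A1", "A2", "B1", "B2"]


def order_bdesc(members, value):
    """Break the hierarchy: rank all members against each other."""
    return sorted(members, key=value, reverse=True)


def order_desc(members, parent, value):
    """Keep family groups together and rank only direct siblings."""
    groups = {}
    for m in members:
        groups.setdefault(parent[m], []).append(m)
    ordered = []
    for siblings in groups.values():
        ordered.extend(sorted(siblings, key=value, reverse=True))
    return ordered


print(order_bdesc(members, amount.get))
print(order_desc(members, parent, amount.get))
```

With these values the breaking sort mixes the two families, while the hierarchical sort lists all of A's children before any of B's.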

Order can also use an attribute instead of a cube value. In this example the AlternateSort attribute of Product is used to sort the children of Demand Loan in descending order. It is a numeric attribute containing integers (i.e. 1, 2, 3, 4, etc) to allow a completely dynamic sort order to be defined:

{ ORDER( {[Demand Loan].Children}, [Product].[AlternateSort], DESC) }

TopCount and BottomCount

A classic Top 10 command:

{ TOPCOUNT( {TM1FILTERBYLEVEL( {TM1SUBSETALL( [Product] )}, 0)}, 10, [Test].([Posting Measures].[Amount]) )}

By omitting a sort order it sorts in the default order (which has the values descending in value and breaks any hierarchies present).

A Top 10 query with an explicit sort order for the results.

{ ORDER( {TOPCOUNT( {TM1FILTERBYLEVEL({TM1SUBSETALL( [Product] )},0)}, 10, [test].([Posting Measures].[Amount]))}, [test].([Posting Measures].[Amount]), BDESC) }

BDESC means to “break” the hierarchy.

Note how the chosen measure is repeated for the sort order. Although the same measure is used in the sample above you could actually find the top 10 products by sales but then display them in the order of, say, units sold or a ‘Strategic Importance’ attribute.

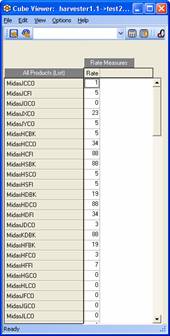

This is the top 10 products based on Test2's Rate values. No explicit order is given, so the result is sorted according to the values in Test2.

{TOPCOUNT( {TM1FILTERBYLEVEL({TM1SUBSETALL( [Product] )},0)}, 10, [Test2].([Rate Measures].[Rate]))}

This is the top 10 products based on test2's data in the Rate measure, ordered from 10 through 1.

{ORDER( {TOPCOUNT( {TM1FILTERBYLEVEL({TM1SUBSETALL( [Product] )},0)}, 10, [test2].([Rate Measures].[Rate]))}, [test2].([Rate Measures].[Rate]), ASC)}

TopCount automatically does a descending sort by value to get the TOP members. If this is not desired, you might want to use the Head function (detailed below) instead.

BottomCount is the opposite of TopCount and so is used to find the members with the lowest values in a cube. Beware that the lowest value is often zero and if that value needs to be excluded from the query you will need to refer to the section on the Filter function later in this document.

A Bottom 10 query with an explicit sort order for the results.

{ ORDER( {BOTTOMCOUNT( {TM1FILTERBYLEVEL({TM1SUBSETALL( [Product] )},0)}, 10, [test].([Posting Measures].[Amount]))}, [test].([Posting Measures].[Amount]), BASC) }
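The logic of these queries can be mimicked in a few lines of Python, which also shows why zeroes tend to dominate a BottomCount. This is a sketch under simple assumptions: top_count and bottom_count are hypothetical helpers, the amount dictionary stands in for a slice of the cube, and the product names and values are invented.

```python
# Hypothetical cube slice: product -> Amount.
amount = {"P1": 50, "P2": 0, "P3": 75, "P4": 20, "P5": 0}


def top_count(members, n, value):
    """The n members with the highest values, highest first."""
    return sorted(members, key=value, reverse=True)[:n]


def bottom_count(members, n, value):
    """The n members with the lowest values, lowest first."""
    return sorted(members, key=value)[:n]


top3 = top_count(list(amount), 3, amount.get)
top3_ascending = sorted(top3, key=amount.get)        # like wrapping TopCount in Order ... ASC
bottom2 = bottom_count(list(amount), 2, amount.get)  # the zeroes win

# Excluding zeroes first, as a Filter would, gives a more useful bottom list.
nonzero = [m for m in amount if amount[m] != 0]
bottom2_nonzero = bottom_count(nonzero, 2, amount.get)

print(top3, top3_ascending, bottom2, bottom2_nonzero)
```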

Further reading: TopSum, TopPercent and their Bottom equivalents are useful related functions.

Filter, by values, strings and attributes

The FILTER function is used to filter the dimension based on some kind of data values rather than just the members and their hierarchy on their own. This data might be cube data (numeric or string) or attribute data. This requires a change of thinking from straightforward single dimensions (lists with a hierarchy and occasionally some attributes) to a multi-dimensional space, where every dimension in these cubes must be considered and dealt with.

This example returns the leaf members of Product that have an Amount value in the Test cube above zero.

{FILTER({TM1FILTERBYLEVEL( {TM1SUBSETALL( [Product] )}, 0)},

[Test].([Posting Measures].[Amount]) > 0 )}

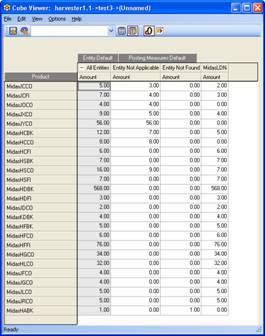

Since the Test cube only has 2 dimensions, Product and Posting Measures, this is a simplistic example. Most cubes will have more than just the dimension being filtered and the dimension with the filter value in. However, it is simple to extend the first example to work in a larger cube.

This example returns the leaf members of Product that have an Amount value for All Entities in the Test3 cube above zero.

{FILTER({TM1FILTERBYLEVEL( {TM1SUBSETALL( [Product] )}, 0)},

[Test3].([Entity].[All Entities],[Posting Measures].[Amount]) > 0 )}

As you can see from the above, simply include all the requisite dimension references inside the round brackets. Usually you will just need a specific named member (e.g. ‘All Entities’). If the dimension is omitted then the CurrentMember is used instead which is similar to using !dimension (i.e. “for each”) in a TM1 rule, and could return different results at a different speed.
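One way to picture what FILTER does in a cube with more dimensions is to treat the cube as a lookup keyed by one member from each dimension. The Python sketch below is only an analogy: the cube dictionary, the product names and the values are all invented, and amount_all_entities is a hypothetical helper that fixes the Entity and measure members explicitly, as the Test3 query above does.

```python
# Hypothetical three-dimensional cube: (Product, Entity, Measure) -> value.
cube = {
    ("P1", "All Entities", "Amount"): 120.0,
    ("P2", "All Entities", "Amount"): 0.0,
    ("P3", "All Entities", "Amount"): 35.5,
}


def amount_all_entities(product):
    """Value for the current product with the other dimensions named explicitly."""
    return cube.get((product, "All Entities", "Amount"), 0.0)


def filter_members(members, predicate):
    """Keep the members for which the predicate holds, preserving their order."""
    return [m for m in members if predicate(m)]


result = filter_members(["P1", "P2", "P3"], lambda p: amount_all_entities(p) > 0)
print(result)
```

The product is the only coordinate that varies from member to member; every other coordinate has to come from somewhere, which is why naming it explicitly is the safer choice.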

Instead of just using a hardcoded value to filter against (zeroes in the examples above), this example returns all products with an amount in the Test cube greater than or equal to the value in the cell [MidasJCFI, Amount].

{FILTER(

{TM1FILTERBYLEVEL( {TM1SUBSETALL( [Product] )}, 0)},

[Test].([Posting Measures].[Amount]) >=

[Test].([Product].[MidasJCFI],[Posting Measures].[Amount])

)}

This query returns the products that have a Rate value in Test2 greater than MidasJXCO's Rate in Test2. Now, this query just returns a set of products – it’s up to you which cube you display these products in – i.e. you can run this while browsing Test and therefore return what looks like an almost random set of products but the fact is that the query is filtering the list of products based on data held in Test2. This may not immediately appear to be useful but actually it is, and can be extremely useful – for example display the current year’s sales for products that were last year’s worst performers. If the data for two years was held in different cubes then this would be exact same situation as this example. There are often many potential uses for displaying a filtered/focused set of data in Cube B that is actually filtered based on data in Cube A.

{FILTER(

{TM1FILTERBYLEVEL( {TM1SUBSETALL( [Product] )}, 0)},

[Test2].([Rate Measures].[Rate]) >

[Test2].([Product].[MidasJXCO],[Rate Measures].[Rate])

)}

As detailed elsewhere, Tail returns the final member(s) of a set. An example of when it is handy when used with Filter would be for finding the last day in a month where a certain product was sold. The simple example below initially filters Product to return only those with an All Entity Amount > 0, and then uses tail to return the final Product in that list.

{TAIL( FILTER(

{TM1FILTERBYLEVEL( {TM1SUBSETALL( [Product] )}, 0)},

[Test3].( [Entity].[All Entities], [Posting Measures].[Amount]) > 0

))}

Note: when the 'other' cubes have more dimensions than Test, the current member (‘each’) is used for any dimension left out, not 'All'. Whether you want ‘each’ or ‘All’, it is clearer to write it explicitly.

You can even filter a list in Cube1 where the filter is a value in one measure compared to another measure in Cube1. This example returns the Products with an amount in the Test cube above zero where this Amount is less than the value in Count.

{FILTER(

{TM1FILTERBYLEVEL( {TM1SUBSETALL( [Product] )}, 0)},

(Test.[Posting Measures].[Amount] < Test.[Posting Measures].[Count]) AND (Test.[Posting Measures].[Amount] > 0)

)}

This example returns all the leaf products that have an Amount in Entity Not Applicable 10% greater than the Amount in Entity Not Found, in the Test3 cube. Not very useful but this was the only example cube we had to work with, but it would be very useful when comparing, say, Actual Q1 Sales with Budget, or finding out which cost centres’ Q2 Costs were 10% higher than Q1. Later in this document we will see how to take that 10% bit and make it a value from another cube, thus allowing administrators, or even end users, to set their own thresholds.

{FILTER(TM1FilterByLevel({TM1SUBSETALL( [Product] )}, 0),

test3.([Entity].[Entity Not Applicable], [Posting Measures].[Amount]) * 1.1 > test3.([Entity].[Entity Not Found], [Posting Measures].[Amount]))}

Filtering for strings uses the same method but you need to use double quotes to surround the string. For example, this query returns products that have a value of “bob” in the Test2 cube against the String1 member from the StringTest dimension. Note that TM1 is case-insensitive.

{FILTER(

{TM1FILTERBYLEVEL( {TM1SUBSETALL( [Product] )}, 0)},

[Test2].([StringTest].[String1]) = "bob"

)}

Filter functions can be nested if required, although the AND or INTERSECT functions may be useful alternatives.

The limit on the number of characters in an MDX subset definition that sometimes applies, 256, is too restrictive for many data-based queries. When trying to shoehorn a longer query into fewer characters there are a few emergency techniques that might help: consider whether you need things like TM1FILTERBYLEVEL, 0 (it might well be that the filter would only return members at the leaf level by definition anyway); whether the dimension name prefix can be removed if the member is guaranteed to be unique; remove all spaces; lookup cubes are not for end users so maybe you could shorten some names (cubes, dimension, members) drastically; whether there are alternative functions with shorter syntaxes that return the same result - e.g. an INTERSECT or AND versus a triple FILTER. Finally, if it really is vital to get a long query working then you can build up the final result in stages – i.e. put some of the filtering into Subset1, then use Subset1 as the subject of Subset2 which continues the filtering, etc.
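Removing spaces by hand is error-prone because the spaces inside bracketed names and quoted strings must survive. A small helper can do it mechanically before the query is pasted into a TI script. The sketch below is a hypothetical utility, not part of TM1: compact_mdx and fits_limit are invented names, and it only strips whitespace that sits next to punctuation, so a word operator such as AND keeps its surrounding spaces.

```python
import re

LIMIT = 256  # the character limit mentioned above

# Bracketed names and quoted strings must be left untouched.
_PROTECTED = re.compile(r'(\[[^\]]*\]|"[^"]*")')


def compact_mdx(mdx):
    """Strip whitespace around punctuation, outside [...] and "..." only."""
    parts = _PROTECTED.split(mdx)
    compacted = []
    for i, part in enumerate(parts):
        if i % 2:  # odd positions are the protected names and strings
            compacted.append(part)
        else:
            part = re.sub(r"\s+", " ", part)
            compacted.append(re.sub(r"\s*([^\w\s])\s*", r"\1", part))
    return "".join(compacted)


def fits_limit(mdx):
    return len(mdx) <= LIMIT


query = ("{FILTER( {TM1FILTERBYLEVEL( {TM1SUBSETALL( [Product] )}, 0)}, "
         "[Test].([Posting Measures].[Amount]) > 0 )}")
short = compact_mdx(query)
print(len(query), len(short), fits_limit(short))
print(short)
```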

Parent, Children, FirstChild, LastChild, Ancestors, Descendants, DrillDownLevel and TM1DrilldownMember

Children returns the set of members one level below a named parent.

{Product.[Demand Loan].Children}

FirstChild returns the… first child one level below a named parent.

{[Product].[Customer Lending].FirstChild}

Returns “Call Participation Purchased”.

LastChild returns the last child one level below a named parent. This is excellent for finding the last day in a month, since they can vary from 28 to 31. Another example is when a consolidation is set up to track a changing set of members (e.g. “Easter”, or “Strategic Customers”).

{[Product].[Customer Lending].LastChild}

Returns “Term Participation Purchased”.

Parent returns the first parent of a given member. If a member has more than one parent, and the full “unique path” to the member is not specified then the first parent according to the dimension order is returned.

{[Product].[MidasTPIS].Parent}

Returns “Bonds”.

{[Product].[External - Bonds].[MidasTPIS].Parent}

Would force TM1 to return the second parent, “External – Bonds”.

Descendants returns the named parent and all of its descendant children – i.e. the hierarchy down to the leaf level:

{Descendants(Product.[Customer Lending]) }

TM1DrilldownMember returns the same thing as descendants:

{TM1DRILLDOWNMEMBER( {[Product].[Customer Lending]}, ALL, RECURSIVE )}

DrillDownLevel just returns the parent and its immediate children:

{DRILLDOWNLEVEL( {[Product].[Customer Lending]})}

DrillDownLevel can be extended with a parameter to say which level to return the members from, rather than the level immediately below, but this doesn’t appear to work in TM1 v9.0 SP2 through to 9.1.1.36.

The common requirement to return a list of just leaf-level descendants of a given consolidated member just needs a level filter applied to the TM1DrillDownMember example above:

{TM1FILTERBYLEVEL({TM1DRILLDOWNMEMBER({[Product].[Customer Lending]},ALL,RECURSIVE)}, 0)}

Or:

{TM1FILTERBYLEVEL({DESCENDANTS(Product.[Customer Lending]) }, 0)}

Ancestors is like a more powerful version of Parent; it returns a set of all the parents of a member, recursively up through the hierarchy, including any multiple parents, grandparents, etc.

{[Date].[2006-10-01].Ancestors}

Returns “2006 – October”, “2006 – Q4”, “2006 – H2”, “2006”, “All Dates”.

The Ancestor function returns a single member, either itself (,0) or its first parent (,1), first parent’s first parent (,2), etc. depending on the value given as a parameter.

{ancestor([Date].[2006-10-01], 0)}

Returns “2006-10-01”.

{ancestor([Date].[2006-10-01], 1)}

Returns “2006 – October”.

{ancestor([Date].[2006-10-01], 2)}

Returns “2006 – Q4”.

{ancestor([Date].[2006-10-01], 3)}

Returns “2006 – H2”.

{ancestor([Date].[2006-10-01], 4)}

Returns “2006”.

{ancestor([Date].[2006-10-01], 5)}

Returns “All Dates”.

Lag, Lead, NextMember, PrevMember, FirstSibling, LastSibling, Siblings and LastPeriods

Lags and Leads are the equivalent of Dnext/Dprev.

{ [Date].[2006-10-03].Lead(1) }

will return 2006-10-04.

Lead(n) is the same as Lag(-n), so either function can be used in place of the other by using a negative value, but if only one direction will ever be needed in a given situation then you should use the matching one for readability's sake. Note that they only return a single member, so to return the set of members between two members you can use the LastPeriods function.
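To illustrate the equivalence, each of the two expressions below (they are separate subset expressions, not one query) should return the same member, “2006-10-04”, assuming the Date dimension holds consecutive days in index order. The -- line is a standard MDX comment and can be removed if your TM1 version does not accept comments.

```mdx
-- Lead(1) and Lag(-1) both step one member forward from 2006-10-03
{ [Date].[2006-10-03].Lead(1) }
{ [Date].[2006-10-03].Lag(-1) }
```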

Equally you can use NextMember and PrevMember when you only need to move along by 1 element.

{ [Date].[2006-10-03].NextMember }

Or:

{ [Date].[2006-10-03].PrevMember }

To return the 6 members preceding, and including, a specific member (here 6 days, because the named member is a day):

{ LastPeriods(6, [Date].[2006-10-03]) }

Or:

LastPeriods(6, [Date].[2006-10-03])

Both work because LastPeriods is a function that returns a set, and TM1 always requires a set. Curly braces convert a result into a set, which is why many TM1 subset definitions are wrapped in a pair of curly braces, but in this case they are not required.

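A very large count returns all of a dimension's remaining members at the same level, starting from a specified member: a negative count returns the member and those after it, a positive count the member and those before it. Despite its name, LastPeriods works on any kind of dimension: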

{ LastPeriods(-9999999, [Date].[2006-10-03]) }
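The opposite direction can be sketched the same way. A large positive count should return the specified member together with all the members before it at the same level; 9999999 is simply a number assumed to be larger than the dimension. The -- line is a standard MDX comment; remove it if your TM1 version rejects comments.

```mdx
-- Positive count: the named member and everything before it at the same level
{ LastPeriods(9999999, [Date].[2006-10-03]) }
```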

Siblings are members who share a common parent. For example, a date of 14th March 2008 will have siblings of all the other dates in March the first of which is the 1st March and the last of which is 31st March. A cost centre under “West Coast Branches” would have a set of siblings of the other west coast branches.

The FirstSibling function returns the first member that shares a parent with the named member. For example:

{[Product].[MidasHCFI].FirstSibling}

Returns “MidasHCBK”.

While:

{[Product].[MidasHCFI].LastSibling}

Returns “MidasHSFI”.

The siblings function should return the whole set of siblings for a given member. TM1 9.0 SP2 through to 9.1.2.49 appear to give you the entire set of members at the same level (counting from the top down) rather than the set of siblings from FirstSibling through to LastSibling only.

{[Product].[MidasHCFI].Siblings}
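If you need only the true siblings on an affected version, one possible workaround is to combine the Parent and Children functions described earlier and ask for the children of the member's parent. This is a sketch that assumes the member has a single parent; with multiple parents, Parent returns only the first according to the dimension order. The -- line is a standard MDX comment and may need removing in your TM1 version.

```mdx
-- Children of the first parent: the member itself plus its siblings
{[Product].[MidasHCFI].Parent.Children}
```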

Filtering by CurrentMember, NextMember, PrevMember, Ancestor and FirstSibling

This example returns the members that have an Amount value in the Test cube above 18. The [Product].CurrentMember part is optional here but it makes the next example clearer.

{FILTER( {TM1FILTERBYLEVEL( {TM1SUBSETALL( [Product] )}, 0)},

[Test].([Product].CurrentMember, [Posting Measures].[Amount]) > 18 )}

This query then modifies the previous query slightly to return members where the NEXT member in the dimension has a value above 18. In practice this is probably more useful in time dimensions.

{FILTER( {TM1FILTERBYLEVEL( {TM1SUBSETALL( [Product] )}, 0)},

[Test].([Product].CurrentMember.NextMember, [Posting Measures].[Amount]) > 18 )}

This can then be extended to return members where the next member's amount is greater than their own.

{FILTER( {TM1FILTERBYLEVEL( {TM1SUBSETALL( [Product] )}, 0)},

[Test].([Product].CurrentMember.NextMember, [Posting Measures].[Amount]) >

[Test].([Product].CurrentMember, [Posting Measures].[Amount]) )}

As well as NextMember, you can use PrevMember, Lag and Lead in the same way.
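As a sketch of the PrevMember variant, the following reuses the Test cube and Product dimension from the examples above to return leaf members whose amount is higher than that of the previous member in the dimension. The -- line is a standard MDX comment; drop it if your TM1 version rejects comments.

```mdx
-- Leaf members whose Amount exceeds the Amount of the previous member in the dimension
{FILTER( {TM1FILTERBYLEVEL( {TM1SUBSETALL( [Product] )}, 0)},
[Test].([Product].CurrentMember.PrevMember, [Posting Measures].[Amount]) <
[Test].([Product].CurrentMember, [Posting Measures].[Amount]) )}
```

Replacing PrevMember with Lag(2) should compare against the member two positions back instead.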

The simple, but unsupported as of 9.1.1.89, Name function allows you to filter according to the name of the member. As well as exact matches you could find exceptions, ‘less-thans’ and ‘greater-thans’, bearing in mind these are alphanumeric comparisons not data values.

This example returns all base members before and including the last day in January 1972.

{FILTER( {TM1FILTERBYLEVEL( {TM1SUBSETALL([Date])} ,0)},

[Date].CurrentMember.Name < "1972-02-01")}

This could also be a useful query on a dimension not as obviously sorted as dates are:

{FILTER( {TM1FILTERBYLEVEL( {TM1SUBSETALL([Product])} ,0)},

[Product].CurrentMember.Name < "MidasJ")}

which returns all base members before MidasJ in terms of their name rather than their dimension index.

Parent returns the first parent of a given member:

{ [Product].[Customer Lending].Parent }

Used with Filter you can come up with another way of doing a “children of” query:

{FILTER( {TM1FILTERBYLEVEL( {TM1SUBSETALL([Date])} ,0)},

[Date].CurrentMember.Parent.Name = "1972 - January")}
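For comparison, the same list can usually be sketched more directly with Children. The two forms match only when every immediate child of the consolidation is a base member whose first parent is that consolidation, so treat this as an approximation rather than an exact equivalent. The -- line is a standard MDX comment and may need removing in your TM1 version.

```mdx
-- Direct "children of" query; matches the Filter version only if every child is a level-0 member
{[Date].[1972 - January].Children}
```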

Ancestor() can be used instead of Parent if desired. This example returns base-level product members whose first parents have a value above zero, in other words a kind of family-based suppress zeroes: a particular product might have a value of zero but if one of its siblings has a value then it will still be returned.

{FILTER( {TM1FILTERBYLEVEL( {TM1SUBSETALL( [Product] )}, 0)}, [Test].(Ancestor([Product].CurrentMember,1), [Posting Measures].[Amount]) > 0 )}

This example filters the products based on whether they match the Amount value of MidasHCBK.

{FILTER(

{TM1SUBSETALL( [Product] )}, [Test].(Ancestor([Product].CurrentMember,0), [Posting Measures].[Amount]) = [Test].([Product].[MidasHCBK], [Posting Measures].[Amount])

)}

This example uses FirstSibling to filter the list based on whether a product's value does not match that of its first sibling (useful for reporting on things that are usually consistent, such as stock levels or employee counts over time).

{FILTER( {TM1FilterByLevel({TM1SUBSETALL( [Product] )}, 0)}, [Test].(Ancestor([Product].CurrentMember,0), [Posting Measures].[Amount]) <> [Test].([Product].CurrentMember.FirstSibling, [Posting Measures].[Amount]) )}

Filtering by Attributes and logical operators

This returns members that match a certain attribute value using the Filter function.

{FILTER( {TM1SUBSETALL( [Product] )}, [Product].[Category] = "Customer Lending")}

This example looks at multiple attribute values to return a filtered list:

{

FILTER(

{TM1SUBSETALL( [Product] )},

(

([Product].[Category]="Customer Lending" OR [Product].[Type]="Debit")

AND

([Product].[Internal Deal]<>"No")

)

)

}

Filtering by level, attribute and pattern are combined in the following example:

{TM1FILTERBYPATTERN( {FILTER( TM1FILTERBYLEVEL({TM1SubsetAll([Product])},0),

[Product].[Internal Deal] = "Yes")}, "*ID??") }

Head, Tail and Subset

Where TopCount and BottomCount sort the values automatically and chop the list to leave only the most extreme values, Head combined with Filter works in a similar manner but Head then returns the FIRST members of the filtered set in their original dimension order.

These queries simply return the first and last members of the Product dimension as listed when you hit the ‘All’ button:

{Head ( TM1SubsetAll ( [Product] ) )}

{Tail ( TM1SubsetAll ( [Product] ) )}

This returns the actual last member of the whole Product dimension according to its dimix:

{Tail(TM1SORTBYINDEX( {TM1DRILLDOWNMEMBER( {TM1SUBSETALL( [Product] )}, ALL, RECURSIVE )}, ASC))}

An example of Tail returning the last member of the Customer Lending hierarchy:

{Tail(TM1DRILLDOWNMEMBER( {[Product].[Customer Lending]}, ALL, RECURSIVE ))}

An example of Head returning the first 10 members (according to the dimension order) in the product dimension that have an Amount in the Test cube above zero.

{HEAD( FILTER( {TM1FILTERBYLEVEL( {TM1SUBSETALL( [Product] )}, 0)}, [Test].([Posting Measures].[Amount]) > 0 ), 10)}

With both Head and Tail the “,10” part can actually be omitted (or just use “,0”) which will then return the first or last member.

This returns the last (in terms of dimension order, not sorted values) product that had an amount > 0 in the Test cube.

{TAIL( FILTER( {TM1FILTERBYLEVEL( {TM1SUBSETALL( [Product] )}, 0)}, [Test].([Posting Measures].[Amount]) > 0 ))}

One example of when this is more useful than TopCount or BottomCount (i.e. when sorting the results would be detrimental) would be to return the last day of the year on which a certain product was sold.

Subset is closely related to Head and Tail, and can actually replicate their results, but is additionally capable of specifying a start point and a range, similar in concept to substring functions (e.g. SUBST) found in other languages, though working on a set of members rather than a string.

The equivalent of Head, 10 would be:

{Subset ( {Tm1FilterByLevel(TM1SubsetAll ( [Product] ) , 0)}, 1, 10)}

But Subset would also allow us to start partitioning the list at a point other than the start. So for example to bring in the 11th – 20th member:

{Subset ( {TM1FilterByLevel(TM1SubsetAll ( [Product] ) , 0)}, 11, 10)}

Note that asking for more members than exist in the original set will just return as many members as it can rather than an error message.

Union

Union joins two sets together, returning the members of each set, optionally retaining or dropping duplicates (default is to drop).

This creates a single list of the top 5 and worst 5 products.

{UNION(

TOPCOUNT( {TM1FILTERBYLEVEL( {TM1SUBSETALL( [Product] )}, 0)}, 5, [Test].([Posting Measures].[Amount]) ),

BOTTOMCOUNT( {TM1FILTERBYLEVEL( {TM1SUBSETALL( [Product] )}, 0)}, 5, [Test].([Posting Measures].[Amount]) )

)}

To create a list of products that sold something in either this cube or another (e.g. last year or this):

{UNION(

FILTER( {TM1FILTERBYLEVEL( {TM1SUBSETALL( [Product] )}, 0)}, [Test].([Posting Measures].[Amount]) > 0 ) ,

FILTER( {TM1FILTERBYLEVEL( {TM1SUBSETALL( [Product] )}, 0)}, [Test3].([Posting Measures].[Amount], [Entity].[All Entities]) > 0 )

) }
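The optional duplicate handling can be sketched with two small hand-written sets that share a member. Appending ALL as a third argument is the standard MDX syntax for retaining duplicates; how a given TM1 version then displays the repeated member in a subset is something to verify on your own server. The -- line is a standard MDX comment; drop it if your TM1 version rejects comments.

```mdx
-- Without ALL, MidasHCFI would appear once; with ALL it is retained from both sets
{UNION( {[Product].[MidasHCBK], [Product].[MidasHCFI]}, {[Product].[MidasHCFI]}, ALL )}
```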

Intersect

Intersect returns only members that appear in both of two sets. One example might be to show products that performed well both last year and this year, or customers that are both high volume and high margin. The default is to drop duplicates although “, ALL” can be added if these are required.

This example returns leaf Product members that have an Amount > 5 as well as a Count > 5.

{

INTERSECT(

FILTER( {TM1FILTERBYLEVEL( {TM1SUBSETALL( [Product] )}, 0)}, [Test].([Posting Measures].[Amount]) > 5 ) ,

FILTER( {TM1FILTERBYLEVEL( {TM1SUBSETALL( [Product] )}, 0)}, [Test].([Posting Measures].[Count]) > 5 )

)

}
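Because both conditions here filter the same leaf-level set, a roughly equivalent sketch uses a single Filter with the AND operator shown in the attributes section. Intersect becomes more valuable when the two sets are built in different ways, for example a TopCount and an attribute filter. The -- line is a standard MDX comment and may need removing in your TM1 version.

```mdx
-- Single-pass alternative: both conditions are tested on each leaf Product member
{FILTER( {TM1FILTERBYLEVEL( {TM1SUBSETALL( [Product] )}, 0)},
[Test].([Posting Measures].[Amount]) > 5 AND [Test].([Posting Measures].[Count]) > 5 )}
```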

Except and Validating Dimension Hierarchies

The Except function takes two sets as its mandatory parameters and removes those members in the first set that also exist in the second. In other words it returns only those members that are not in common between the two sets, but note that members that are unique to the second set are not included in the result set.

Except is a useful function in a variety of situations, for example when selecting all the top selling products except for 1 or 2 you already know are uninteresting or irrelevant, or selecting all the cost centres with high IT costs – except for the IT department.

The simplest example is to have a first set of 2 members and a second set of 1 of those members:

EXCEPT (